The unusual architecture in Los Alamos National Laboratory’s newest supercomputer is a step toward the exascale – systems around a hundred times more powerful than today’s best machines.

Materials cookbook

A Berkeley Lab project computes a range of materials properties and boosts the development of new technologies.

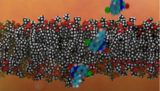

No passing zone

Lawrence Livermore National Laboratory models the blood-brain barrier to find ways for drugs to reach their target.

Chipping away

Redirecting an old chip might change the pathway to tomorrow’s fastest supercomputers, Argonne National Laboratory researchers say.

Nanogeometry

With a boost from the Titan supercomputer, a Berkeley Lab group works the angles on X-rays to analyze thin films of interest for the next generation of nanodevices.

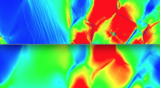

Forecasting failure

Sandia National Laboratories aims to predict physics on a micrometer scale.

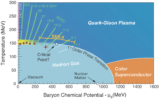

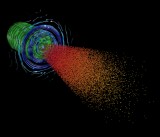

Early-universe soup

ORNL’s Titan supercomputer is helping Brookhaven physicists understand the matter that formed microseconds after the Big Bang.

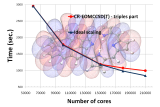

Multitalented metric

An alternative computing benchmark emerges to reflect scientific performance.

Bright future

The smart grid turns to high-performance computing to guide its development and keep it working.

Powering down

A PNNL team views ‘undervolting’ (turning down the power supplied to processors) as a way to make exascale computing feasible.

Analysis restaurant

The AnalyzeThis system deals with the rush of huge data-analysis orders typical in scientific computing.

Noisy universe

Berkeley Lab cosmologists sift tsunamis of data for signals from the birth of galaxies.

Layered look

With help from the Titan supercomputer, an Oak Ridge National Laboratory team is peering at the chemistry and physics between the layers of superconducting materials.

Bits of corruption

Los Alamos’ extensive study of HPC platforms finds silent data corruption in scientific computing – but not much.

Sneak kaboom

At Argonne, research teams turn to supercomputing to study a phenomenon that can trigger surprisingly powerful explosions.

A smashing success

The world’s particle colliders unite to share and analyze massive volumes of data.

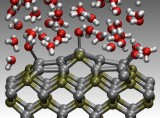

Back to the hydrogen future

At Lawrence Livermore National Laboratory, Computational Science Graduate Fellowship alum Brandon Wood applies the world’s most sophisticated molecular dynamics codes on America’s leading supercomputers to model hydrogen’s reaction kinetics.

Joint venture

Sandia National Laboratories investigators turn to advanced modeling to test the reliability of the joints that hold nuclear missiles together.

Life underground

A PNNL team builds models of deep-earth water flows that affect the tiny organisms that can make big contributions to climate-changing gases.

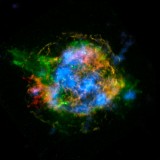

Supernova shocks

More than 10 years after simulations first suggested its presence, observations appear to confirm that a key instability drives the shock behind one kind of supernova.

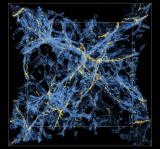

Rewinding the universe

Dark energy propels the universe to expand faster and faster. Researchers are using simulations to test different conceptions about how this happens.

Balancing act

A Pacific Northwest National Laboratory researcher is developing approaches to spread the work evenly over scads of processors in a high-performance computer and to keep calculations clicking even when part of the machine hiccups.

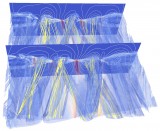

Predicting solar assaults

When Earth’s magnetosphere snaps and crackles, power and communications technologies can break badly. Three-dimensional simulations of magnetic reconnection aim to forecast the space storms that disrupt and damage.

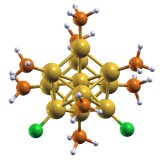

Quantum gold

Driven by what’s missing in experiments, Brookhaven’s Yan Li applies quantum mechanics to compute the physical properties of materials.

Star power

A Lawrence Livermore National Laboratory researcher simulates the physics that fuels the sun, with an eye toward creating a controllable fusion device that can deliver abundant, carbon-free energy.