Brookhaven National Lab applies AI to make big experiments autonomous.

Brookhaven National Laboratory

Turbocharging data

Pairing large-scale experiments with high-performance computing can reduce data processing time from several hours to minutes.

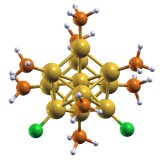

Molecular landscaping

A Brookhaven-Rutgers group uses supercomputing to target the most promising drug candidates from a daunting number of possibilities.

Meeting the eye

A Brookhaven National Laboratory computer scientist is building software to help researchers interact with their data in new ways.

Cloud forebear

PanDA, a data-management system built to analyze the universe’s building blocks, anticipated cloud computing.

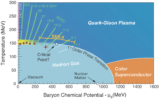

Early-universe soup

ORNL’s Titan supercomputer is helping Brookhaven physicists understand the matter that formed microseconds after the Big Bang.

A smashing success

The world’s particle colliders unite to share and analyze massive volumes of data.

Quantum gold

Driven by what’s missing in experiments, Brookhaven’s Yan Li applies quantum mechanics to compute the physical properties of materials.

Going big to study small

It takes a big computer to model very small things. And, like its namesake state, New York Blue is big. Made up of 36,864 processors, the massively parallel IBM Blue Gene/L is housed at DOE’s Brookhaven National Laboratory (BNL) on New York’s Long Island, where, among other things, it’s used to model quantum dots, or […]