Physics on autopilot

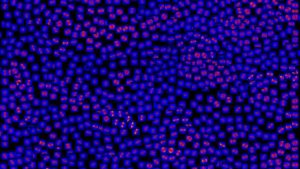

Example dataset collected during an autonomous X-ray scattering experiment at Brookhaven National Laboratory (BNL). An artificial intelligence/machine learning decision-making algorithm autonomously selected various points throughout the sample to measure. At each position, an X-ray scattering image (small squares) is collected and automatically analyzed. The algorithm considers the full dataset as it selects subsequent experiments. (Image: Kevin Yager, BNL.)

As a young scientist experimenting with neutrons and X-rays, Kevin Yager often heard this mantra: “Don’t waste beamtime.” Maximizing productive use of the potent and popular facilities that generate concentrated particles and radiation frequently required working all night to complete important experiments. Yager, who now leads the Electronic Nanomaterials Group at Brookhaven National Laboratory’s Center for Functional Nanomaterials (CFN), couldn’t help but think “there must be a better way.”

Yager set out to streamline and automate as much of an experiment as possible, writing a lot of software along the way. Then he had an epiphany: artificial intelligence and machine-learning methods could be applied not only to mechanize simple, tedious tasks that humans don’t enjoy but also to reimagine experiments.

“Rather than having human scientists micromanaging experimental details,” he remembers thinking, “we could liberate them to actually focus on scientific insight, if only the machine could intelligently handle all the low-level tasks. In such a world, a scientific experiment becomes less about coming up with a sequence of steps, and more about correctly telling the AI what the scientific goal is.”

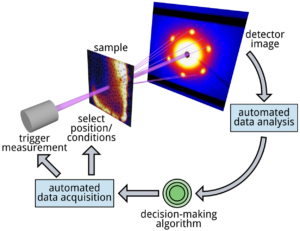

Yager and colleagues are developing methods that exploit AI and machine learning to automate nearly every stage of an experiment. “This includes physically handling samples with robotics, triggering measurements, analyzing data, and – crucially – automating the experimental decision-making,” he explains. “That is, the instrument should decide by itself what sample to measure next, the measurement parameters to set, and so on.”

Although robotic instruments have a long history, Yager emphasizes the distinction between automated and autonomous methods. “Automated workflows simply follow through a pre-programmed sequence of steps,” he says. “But autonomous experiments use AI to make novel decisions in real-time. The AI algorithm can intelligently select new experiments to perform, based on the set of experiments just conducted. It’s essentially a loop that can explore a scientific problem more quickly and efficiently than a human alone could.”
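To make the distinction concrete, here is a minimal Python sketch of a closed measure–analyze–decide loop. Everything in it is hypothetical: `simulated_measurement` stands in for collecting and analyzing a scattering image at one position on a gradient sample (a toy step function, not real data), and the decision rule (bisect the gap where the measured signal changes most) is a simple adaptive-sampling heuristic, not the statistical models, often Gaussian processes, that real autonomous systems rely on.

```python
def simulated_measurement(x: float) -> float:
    """Hypothetical stand-in for collecting and analyzing one scattering image at
    position x on a gradient sample: reports 1.0 where the material is ordered
    (x > 0.3 in this toy model) and 0.0 elsewhere."""
    return 1.0 if x > 0.3 else 0.0


def choose_next(results: dict[float, float], explore: float = 0.1) -> float:
    """Decision step: score each gap between neighboring measurements by how much
    the measured signal changes across it (plus a small bonus for wide gaps)
    and return the midpoint of the best-scoring gap."""
    xs = sorted(results)

    def score(gap: tuple[float, float]) -> float:
        a, b = gap
        return abs(results[b] - results[a]) + explore * (b - a)

    a, b = max(zip(xs, xs[1:]), key=score)
    return (a + b) / 2


def autonomous_loop(n_steps: int) -> dict[float, float]:
    # Seed with measurements at both ends of the sample.
    results = {x: simulated_measurement(x) for x in (0.0, 1.0)}
    for _ in range(n_steps):
        x = choose_next(results)               # decide, based on all data so far
        results[x] = simulated_measurement(x)  # measure and analyze
    return results
```

An automated workflow, by contrast, would step through a fixed list of positions. Here each new position depends on the values measured so far, so `autonomous_loop(5)` visits 0.5, 0.25, 0.375, 0.3125 and 0.28125, closing in on the toy boundary at x = 0.3. With `explore=0.1`, any gap where the signal changes outscores every flat gap, so this sketch keeps refining the boundary; a real system has to balance exploration and refinement more carefully.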

Yager and colleagues are applying these concepts on scattering beamlines, which use powerful X-rays to measure materials’ atomic and nanoscale structures. This is no simple task and involves many collaborators.

An autonomous experimentation loop. By automating data acquisition, data analysis and even decision-making using AI/ML algorithms, the instrument can search through experimental problems without human intervention. (Schematic: Kevin Yager, BNL.)

Yager describes how the work is divided: “At Brookhaven, I and others at the CFN provide expertise in materials science and data analytics; Masa Fukuto and others at the National Synchrotron Light Source II provide frontier measurement tools; and the Computational Science Initiative helps us with advanced algorithms and cluster computing. We also have key external collaborators, including Marcus Noack at Lawrence Berkeley National Laboratory, who is developing the mathematics and software framework for decision-making. It’s extremely exciting to work on a problem so complicated that it requires such a broad and diverse team.”

At the highest level, the team wants to transform the way researchers think about experiments and to liberate scientists to focus on the scientific meaning of their data. “This will allow us to tackle previously impossible problems and accelerate the pace of scientific discovery,” Yager says.

Autonomous experimentation promises to accelerate the discovery of new materials. Such systems “can not only tirelessly explore large ranges of parameters, they can also analyze these high-dimensional spaces and identify correlations a human would miss,” Yager notes. “Moreover, we can couple autonomous instruments to simulation code, allowing [them] to leverage our best models for material physics. This combination can enable autonomous systems to search and discover in ways that conventional experiments cannot.”

Autonomous experimentation also aims for maximum efficiency, using instrument time as effectively as possible and “shortening the design loop researchers use to discover new materials,” he says. “One of the fun things” about the new methods, he adds, “is that they can surprise you with how clever they are – even though they are implementing well-defined mathematical algorithms.”

In one experiment, the team instructed the AI algorithm to measure a sample in a way that maximized information gain while minimizing experimental costs, such as measurement time. To their surprise, it began measuring in a stripe pattern, moving the sample horizontally and only rarely shifting it vertically.

They realized the vertical motor they were using was much slower than the horizontal motor. “Without us explicitly telling the AI about that difference, it was able both to identify it and then optimize the measurement scheme to be as fast and efficient as possible,” Yager says. “It was amazing.”
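Why would a cost-aware objective produce stripes? The Python sketch below illustrates the idea under loud assumptions: the motor speeds, the grid, the distance-based `info_gain` proxy and the simple gain-minus-cost score are all hypothetical and are not the team’s actual algorithm. There is also one key difference from the experiment: here the motor speeds are written into `move_time` directly, whereas the team’s algorithm worked out the speed difference on its own.

```python
import math

# Hypothetical stage speeds (mm/s); the vertical motor is assumed 10x slower.
SPEED_X, SPEED_Y = 1.0, 0.1


def move_time(a, b):
    """Seconds to move from a to b, assuming both motors run simultaneously."""
    return max(abs(b[0] - a[0]) / SPEED_X, abs(b[1] - a[1]) / SPEED_Y)


def info_gain(p, measured):
    """Crude information-gain proxy: distance to the nearest measured point."""
    return min(math.dist(p, m) for m in measured)


def next_point(current, measured, candidates, cost_weight=1.0):
    """Pick the unmeasured candidate with the best gain-minus-cost score."""
    unmeasured = [c for c in candidates if c not in measured]
    return max(
        unmeasured,
        key=lambda c: info_gain(c, measured) - cost_weight * move_time(current, c),
    )


def run(n_steps):
    # 5 x 5 grid of positions on a 1 mm pitch, listed row by row.
    grid = [(x, y) for y in range(5) for x in range(5)]
    path = [(0, 0)]
    for _ in range(n_steps):
        path.append(next_point(path[-1], path, grid))
    return path
```

With these numbers, `run(9)` sweeps the entire bottom row before paying the 10-second cost of a single vertical step, then sweeps the next row: the stripe pattern in miniature.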

In another experiment, the team was stymied over the correct processing conditions to make a particular nanomaterial. The researchers were iterating manually and having little luck. “So we instead made a combinatorial sample, which is a gradient with every possible value of the process parameters, and had the AI explore it,” Yager says. “The autonomous experiment identified little regions exhibiting unexpected ordering. By looking specifically in these regions, we uncovered the problem with our fabrication protocol and immediately corrected it. This discovery sped up our research and identified some neat new kinds of ordering we were not expecting.”

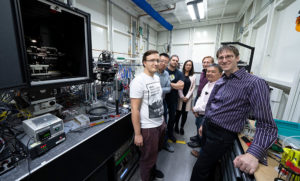

Left to right: Arkadiusz Leniart, Ruipeng Li, Marcus Noack, Esther Tsai, Gregory Doerk, Masafumi Fukuto and Kevin Yager conducting autonomous experiments at the Complex Materials Scattering (CMS) beamline. CMS is an X-ray scattering instrument at BNL’s National Synchrotron Light Source II facility, operated in partnership with the Center for Functional Nanomaterials. Researchers use a photothermal annealer (black box at left), developed by the University of Warsaw’s Leniart and Pawel Majewski, to process samples during measurement with X-rays, and an artificial intelligence algorithm controls the experiment. (Photo: BNL.)

Multiple advances are needed before researchers can realize the full potential of autonomous experimentation, Yager says. “We need to refine our algorithms to make them more physics-informed without giving up on the generality of the method. We also need better computational models that provide correct predictions for material behavior but are also fast enough to be used in real-time during an experiment. We need a broader range of sample reactors – that is, experimental platforms we can use to synthesize new materials on the fly, during measurement. And, of course, we need the software machinery to keep all these components coordinated.”

In the short term, autonomous methods make the most sense for well-defined but extremely large and complex experimental problems, such as finding the perfect material formulation.

“We also think it could be quite useful for real-time control,” Yager says. “For instance, guiding a material into the right structural state by actively changing it while measuring its properties.”

At the X-ray scattering beamline, the team identified three classes of experiments that will benefit from autonomous methods. “First, we can do X-ray imaging experiments, where we scan from point to point along a sample surface more efficiently,” Yager says. “Second, we can explore pre-made libraries of samples to map out phase diagrams. Third, we can actively control material processing – via temperature or solvent, for instance – to drive systems into target states.”

Yager thinks every aspect of science could benefit from these concepts. “It’s a paradigm shift in which we stop thinking of experiments as isolated tasks and instead try to build discovery platforms by seamlessly integrating different experimental systems. Basically, doing an experiment will become like writing software, where you mix and match modules and are limited only by imagination.”

About the Author

The author is a freelance science and tech writer based in New Hampshire.