ARM wrestling

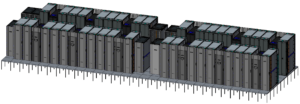

A computer-aided design conception of Sandia National Laboratories’ Astra supercomputer, used to work out the floor layout of the supercomputer’s compute, cooling, network and data-storage cabinets. (Illustration: Hewlett Packard Enterprise.)

The kinds of chips that run your cellphone and digital television are powering a high-performance computer that could model nuclear weapons.

At Sandia National Laboratories, computational scientists are testing one of the first supercomputers based on ARM processors, the technology found in smartphones and cars. The new system, called Astra, is the first project in the Vanguard program, an effort to expand the large-scale computer architectures available to the Department of Energy’s National Nuclear Security Administration (DOE NNSA).

Vanguard bridges a development gap between small testbed systems and full-scale production supercomputers that handle the workhorse calculations involved in stockpile stewardship. Since the United States ended nuclear weapon testing more than 25 years ago, NNSA has relied on sophisticated computational simulations to maintain the devices and keep them safe. These models must run on large-scale hardware to achieve the best accuracy and efficiency.

To support these efforts, computational scientists at Sandia and the other NNSA national security laboratories – Lawrence Livermore and Los Alamos – need hardware technologies that run codes to model complex scenarios in chemistry, physics, materials science and engineering. “But we’ve seen over past years that our choices for technologies have become a little more limited than we would like,” says James Laros, the Vanguard project lead at Sandia’s Albuquerque, New Mexico, site. Astra is the first of a potential series of projects to test new hardware.

Investigating new computer equipment on this scale can involve risks. A technology might look promising when installed on a handful of nodes, but scaling it up can bring a slew of challenges, Laros says. The Vanguard program supports research on prototypes that are sufficiently large to test production codes but without the immediate pressure to support day-to-day operations.

“ARM would be a nice addition if it is feasible to use for HPC (high-performance computing),” Laros says. He and colleagues began testing the first 64-bit ARM technology in small testbed systems in 2014. ARM processors are ubiquitous in consumer electronics, Laros notes, but until recently they haven’t performed well enough to compete with the workhorse IBM or Intel CPUs in today’s largest computers.

A newer family of ARM processors is more powerful and offers other advantages, including better memory bandwidth. “We have really twice as many memory channels on the ARM chip as we have on some of our production server-class processors in our other system,” says Simon Hammond, a Sandia research scientist. Many of the NNSA applications require integrating data from various parts of the hardware, he notes. Twice the memory channels could at least double the speed at which the hardware accesses those various data components. “It will make a really big difference to our codes.”
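To make the memory-channel argument concrete, here is a minimal Python sketch of the usual back-of-the-envelope model: theoretical peak bandwidth scales with the number of channels, and a kernel limited purely by memory traffic takes time proportional to bytes moved divided by bandwidth. The channel counts, transfer rate and data volume below are illustrative assumptions, not figures from Sandia, and the function names are hypothetical.

```python
def peak_bandwidth_gbs(channels, transfers_per_sec, bytes_per_transfer=8):
    """Theoretical peak memory bandwidth of one socket, in GB/s.

    Assumes each channel moves `bytes_per_transfer` bytes per transfer
    (8 bytes corresponds to a 64-bit-wide channel).
    """
    return channels * transfers_per_sec * bytes_per_transfer / 1e9


def bandwidth_bound_seconds(bytes_moved, bandwidth_gbs):
    """Run time of a kernel limited only by memory bandwidth."""
    return bytes_moved / (bandwidth_gbs * 1e9)


# Assumed, illustrative values (not from the article).
TRANSFERS_PER_SEC = 2.4e9   # hypothetical memory transfer rate
BYTES_MOVED = 1e12          # hypothetical data volume touched by a code

baseline = peak_bandwidth_gbs(4, TRANSFERS_PER_SEC)  # fewer channels
doubled = peak_bandwidth_gbs(8, TRANSFERS_PER_SEC)   # twice as many channels

t_baseline = bandwidth_bound_seconds(BYTES_MOVED, baseline)
t_doubled = bandwidth_bound_seconds(BYTES_MOVED, doubled)

print(f"peak bandwidth ratio: {doubled / baseline:.1f}x")
print(f"run time ratio:       {t_baseline / t_doubled:.1f}x")
```

In this idealized model, doubling the channels doubles peak bandwidth and halves the run time of a purely bandwidth-bound kernel. Real codes also depend on caches, latency and compute, so measured gains can differ.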

The Astra system uses Cavium ThunderX2 64-bit Armv8 microprocessors and includes 2,592 nodes. In November 2018, it achieved a computation speed of 1.529 petaflops, more than 1.5 million billion calculations per second, placing 203rd on the TOP500 list. It will not rival the fastest supercomputers but will be powerful enough to test NNSA codes and compare with existing production-class systems.
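For readers who want to check the arithmetic, the short Python sketch below converts the quoted petaflops figure into calculations per second and into an average per node. It assumes, purely for illustration, that every node in the machine took part in the benchmark run, which the figures above do not confirm.

```python
PETA = 1e15                    # one petaflops = 10^15 calculations per second
MILLION_BILLION = 1e6 * 1e9    # "million billion" is also 10^15

benchmark_petaflops = 1.529    # November 2018 result quoted above
nodes = 2592                   # node count quoted above

flops = benchmark_petaflops * PETA

# Assumption: all nodes contributed to the benchmark run.
per_node_gigaflops = flops / nodes / 1e9

print(f"{flops / MILLION_BILLION:.3f} million billion calculations per second")
print(f"about {per_node_gigaflops:.0f} gigaflops per node on average")
```

Under that assumption, each node contributes just under 590 billion calculations per second.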

Planning for Astra started in June 2017, as Laros and his team worked with vendors on an ARM-based approach. In fall 2018 the team started its initial assessment of Astra’s performance and accuracy, using unclassified codes as benchmarks. Starting this month, researchers expect to try restricted programs. Eventually, they’ll directly compare Astra’s performance on NNSA’s classified codes and the overall user experience with the same metrics on existing production systems.

The prototype system allows the team to focus on analyzing and improving Astra’s performance and on whether they can boost efficiency and accuracy. “What do we need to do to the environment to make the applications run on this pathway?” Hammond asks. “It’s a perfect combination of still trying to solve the hard problems with real applications but not being under the gun to do it really, really quickly.”

Throughout Astra’s development and optimization, the Vanguard team has collaborated with Westwind Computer Products Inc. and Hewlett Packard Enterprise. Hammond compares such systems to stacked plates whose base includes layers from suppliers that map some of the basic structure and primitive functions into the system. “So it’s important that we get those base plates really done well,” he says. “Above that then there’s a load of other plates, and that’s the bit where the DOE and the teams here at Sandia and (Los Alamos and Livermore) will add all their value in their applications.”

Laros expects Vanguard will go beyond examining processors to, for example, testing advanced network technologies. “Just like memory bandwidth can be a bottleneck, networks can also be a bottleneck because our applications run on thousands to tens of thousands of nodes in cooperation.”

The Vanguard program won’t solve all the challenges inherent in large-scale computer platforms, Laros says, but it will create a path for testing ARM and other new technologies and comparing their performance to current computer architectures. If someone wants to propose a future ARM-based production system, his team will know whether it’s viable after it tests Astra.

Vanguard’s goal is not to build the fastest or best architecture, Laros stresses. “It’s there to try to give us more choices.”

About the Author

Sarah Webb is science media editor at the Krell Institute. She’s managing editor of DEIXIS: The DOE CSGF Annual and producer-host of the podcast Science in Parallel. She holds a Ph.D. in chemistry and a bachelor’s degree in German, and she completed a Fulbright fellowship doing organic chemistry research in Germany.