Optimized for discovery

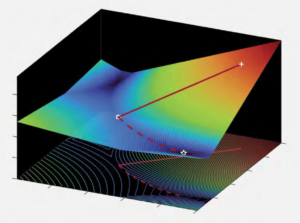

A black-box optimization algorithm can increase a multigrid simulation’s efficiency. Seen here: a three-dimensional application of an unoptimized simulation (plus sign) and an improved, faster approach (box). The star indicates a valley, a computational-energy low point with a shorter simulation run time than is possible without the algorithm. (Image: Stefan Wild.)

Stefan Wild came to Argonne National Laboratory in the summer of 2006 for what was to be a four-month summer practicum. He’s never really left.

Wild discovered a highly collaborative, open-science research environment that’s optimal for him. It’s fostered Wild’s dynamic career, including a 2020 DOE Early Career Research Program award.

“I would never have predicted then that I’d be at Argonne for more than a decade, and the only reason I’m here is because of my practicum,” says Wild, a 2005-2008 Department of Energy Computational Science Graduate Fellowship (DOE CSGF) alumnus who completed his Ph.D. in operations research at Cornell University.

Wild’s scientific specialty is developing mathematical optimization techniques to improve how models and simulations perform on high-performance computers. During his practicum he developed an important algorithmic addition to TAO, the lab’s toolkit for advanced optimization, and after graduating he was hired as an Argonne Director’s Postdoctoral Fellow. Over the past dozen years Wild has steadily risen through the scientific and management ranks to his current position directing the Laboratory for Applied Mathematics, Numerical Software and Statistics (LANS).

Wild says Argonne computing’s flat organizational structure has been perfect for his professional growth. He oversees about 60 scientists and engineers but still spends about three-quarters of his time on his research. The lab’s interdisciplinary nature helps Wild find intriguing ideas, whether he’s playing with Argonne’s Ultimate Frisbee club (which has led to novel lab collaborations) or leading brainstorming meetings of LANS’ dozen-member senior research council.

This environment also nurtures Wild’s math-with-a-mission research approach. “What really attracts me is doing math to advance the basic sciences,” says Wild, who in late 2020 was elected chair of the Society for Industrial and Applied Mathematics Activity Group on Computational Science and Engineering.

His approach has led to a remarkably wide range of collaborations, from using computational techniques to improve cancer research to automating production processes for novel nanomaterials.

An early collaboration with practicum advisor Jorge Moré led to Wild’s focus on optimization for scientific machine learning, a core artificial intelligence sub-technology. In 2011 they developed POUNDERS, an optimization code that dramatically reduces the time required to calibrate atomic nuclei simulations on supercomputers, as part of DOE’s cross-lab Universal Nuclear Energy Density Functional SciDAC (Scientific Discovery through Advanced Computing) collaboration. “What used to take physicists a year could now be done in a couple hours,” Wild says.

Now, with his DOE early-career award, he’s focused on a five-year effort to tackle one of the gnarliest optimization challenges: derivative-free optimization. In classical mathematical methods, a system is optimized by precisely modeling the effect an input variable has on the system and tuning that variable to get a desired outcome. For example, the optimization could identify the ideal number of processors to run so that a supercomputer uses the least energy for a simulation. Researchers turn to derivative-free, or black-box, optimization for systems so complex that the mathematical equations needed to describe them are not all available.

“The only thing that you know about a black-box system is what you get back from evaluating it,” explains Wild, who with Argonne colleagues co-wrote a 2019 Acta Numerica cover-story review of state-of-the-art derivative-free optimization methods. “It’s like a Magic 8 Ball: You ask it a question, and you shake it up, and then it gives you an answer. You learn something about that Magic 8 Ball from that sort of oracle or input-output relationship and use this to optimize the system.”
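To make the “ask a question, get an answer” idea concrete, here is a minimal sketch of one of the simplest derivative-free methods, compass (pattern) search. It is not POUNDERS or any method from Wild’s own work; it only illustrates the principle that the optimizer learns about the system purely from returned values. The names `black_box` and `compass_search` are hypothetical, and `black_box` is a toy stand-in for an expensive simulation, assumed here for illustration.

```python
def black_box(x):
    # Hypothetical stand-in for an expensive simulation. The optimizer never
    # looks inside; it only sees the number this function returns.
    return (x[0] - 3.0) ** 2 + (x[1] + 1.0) ** 2 + 2.0


def compass_search(f, x0, step=1.0, tol=1e-6, max_evals=10_000):
    """Minimize f using only function values (no derivatives).

    Polls the 2n points x +/- step * e_i, moves to the first one that lowers f,
    and halves the step when no poll point helps. The evaluation budget is
    checked once per poll, so it can be exceeded by fewer than 2n evaluations.
    """
    x = [float(v) for v in x0]
    fx = f(x)
    evals = 1
    while step > tol and evals < max_evals:
        improved = False
        for i in range(len(x)):
            for sign in (1.0, -1.0):
                trial = x.copy()
                trial[i] += sign * step
                f_trial = f(trial)  # "shake the Magic 8 Ball" once
                evals += 1
                if f_trial < fx:
                    x, fx = trial, f_trial
                    improved = True
                    break
            if improved:
                break
        if not improved:
            # No direction helped at this scale: shrink the pattern and retry.
            step *= 0.5
    return x, fx, evals


x, fx, evals = compass_search(black_box, [0.0, 0.0])
print(x, fx)  # [3.0, -1.0] 2.0
```

Each call to `f` plays the role of one oracle query, which is why practical derivative-free methods work hard to keep the evaluation count low when every query is a full simulation run.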

Black-box optimizations are a foundational component of scientific machine-learning efforts, including preparations for one of the first exascale supercomputers, Aurora, which was developed with AI in mind and is coming to Argonne in 2022. The near-term noisy intermediate-scale quantum computers that DOE labs are pioneering are also ripe for black-box optimization, Wild says.

Asked if managing the $2.5 million early-career award feels onerous, Wild laughs. “That’s the fun part,” he says. “I get to choose what I work on and expand my group to have postdoctoral researchers and students working with me on these foundational problems. And so, this is really exciting because I get the chance to allow some of my enthusiasm and the fact that I love my job inspire the next generation of researchers.”

Editor’s note: Reprinted from DEIXIS: The DOE CSGF Annual.

About the Author

Jacob Berkowitz is a science writer and author. His latest book is The Stardust Revolution: The New Story of Our Origin in the Stars.