Extreme 3D

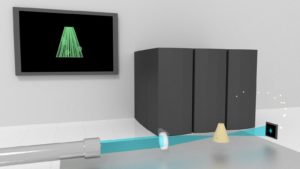

An X-ray beam (blue) is focused on a rotating specimen (yellow), and the resulting diffraction patterns are recorded (far right). The display in the background shows a computer-generated 3D reconstruction of the specimen. (Image: Ming Du, Argonne National Laboratory.)

The Advanced Photon Source (APS) at the Department of Energy’s (DOE’s) Argonne National Laboratory is undergoing an $815 million upgrade, known as APS-U, to create a more powerful X-ray facility. When complete, it will deliver X-ray beams 100 to 1,000 times brighter than today’s, with enough photons to penetrate even centimeter-thick samples.

APS is akin to a giant microscope. It produces extremely bright X-rays to illuminate dense materials and reveal their structure and chemistry at the molecular and atomic scale in three dimensions.

The upgrade will let researchers image thicker samples at finer resolution than is possible today, such as a 1-centimeter sample at 10-nanometer resolution. Today’s APS can easily image a 1-centimeter sample at a resolution of tens of microns, for example, but 10-nanometer resolution will require APS-U. X-ray microscopes, however, have a limited depth of field (DOF). This is a problem for thick samples: when a sample is thicker than the DOF, the microscope can’t sharply resolve features beyond it.

So researchers are seeking a solution now, before the upgraded facility comes online. “We’re developing a method to image samples beyond the DOF to maximally exploit the potential of APS-U in imaging thick samples,” says Ming Du, an Argonne postdoctoral researcher and principal investigator of an ASCR Leadership Computing Challenge project. Its goal: employ Theta, the lab’s 11.7-petaflops computer, to perform large-sample 3D image reconstruction.

X-ray microscopy samples are typically assumed to be thin (tens of microns thick for 10- to 20-nanometer resolution), so that X-ray beams can be treated as traveling in straight lines through them. Researchers need this level of resolution to image subcellular structures (for example, to map neurons in brain tissue) or integrated circuit chips.

“This is the fundamental assumption for most X-ray 3D imaging techniques,” Du says. “But when the sample is beyond DOF, we can no longer ignore the diffraction of X-ray beams in the sample.” Diffraction makes X-rays spread out from their initial direction of travel.

Scientists must properly model diffraction to get a clear picture of the entire sample. “Such a need arises frequently for nanoscale imaging, because the DOF shrinks quickly as we improve our resolution,” Du says. “When we image with 10-nanometer resolution, the DOF could drop to just a few micrometers when using a beam energy of approximately 10 keV – any samples beyond that thickness will require a new 3D reconstruction method.”
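Du’s figure can be checked with a back-of-the-envelope calculation. The sketch below is a rough estimate, not the team’s method: it assumes the commonly quoted zone-plate estimate DOF ≈ 5.4 δ²/λ for a resolution δ (the prefactor differs between conventions and optics) and the photon wavelength λ = hc/E. The function names are hypothetical.

```python
HC_KEV_NM = 1.23984  # Planck constant times speed of light, in keV*nm


def wavelength_nm(energy_kev):
    """X-ray wavelength from photon energy: lambda = hc / E."""
    return HC_KEV_NM / energy_kev


def depth_of_field_nm(resolution_nm, energy_kev, prefactor=5.4):
    """Rough DOF estimate, DOF ~ prefactor * resolution^2 / wavelength.

    The default prefactor is an assumed convention, not a universal constant.
    """
    return prefactor * resolution_nm ** 2 / wavelength_nm(energy_kev)


for res in (10.0, 20.0):
    dof_um = depth_of_field_nm(res, 10.0) / 1000.0
    print(f"{res:.0f} nm resolution at 10 keV: DOF ~ {dof_um:.1f} um")
```

At 10 keV this gives about 4.4 micrometers for 10-nanometer resolution and about 17.4 micrometers for 20-nanometer resolution. Whatever prefactor is used, the DOF scales with the square of the resolution, so halving the resolution shrinks the DOF fourfold.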

Today, the X-ray imaging community works with nanoscale images of samples that measure in the megapixels. But researchers on this project are “looking ahead to see how we can do gigapixel or beyond,” says Chris Jacobsen, Argonne distinguished fellow and a professor of physics and astronomy at Northwestern University. “We’re trying to push the boundaries of what we can do by combining fine-scale imaging with large samples.”

Building on earlier proof-of-concept work, Du and colleagues used a method called “multislice propagation”: a simulation technique that models a 3D sample as a stack of thin 2D slices separated by vacuum gaps.

“From the solid slices, we can calculate how the sample scatters X-ray beams,” Du says, “and from the vacuum gaps we can calculate how the X-ray beams propagate by themselves.”
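Du’s description maps onto a short loop. The sketch below is a minimal 1D illustration, not the team’s code. It assumes the paraxial Fresnel propagator, a complex refractive index n = 1 - δ + iβ with the sign convention noted in the comments, and a naive discrete Fourier transform in place of an FFT so that it needs only the standard library. All function names and sample values are hypothetical.

```python
import cmath
import math


def dft(values, inverse=False):
    """Naive O(N^2) discrete Fourier transform; a real code would call an FFT."""
    n = len(values)
    sign = 1.0 if inverse else -1.0
    out = []
    for k in range(n):
        total = sum(v * cmath.exp(sign * 2j * math.pi * m * k / n) for m, v in enumerate(values))
        out.append(total / n if inverse else total)
    return out


def propagate(wave, dz, wavelength, dx):
    """Propagate a 1D wavefield a distance dz through vacuum (paraxial Fresnel propagator)."""
    n = len(wave)
    spectrum = dft(wave)
    for k in range(n):
        u = (k if k <= (n - 1) // 2 else k - n) / (n * dx)  # spatial frequency, FFT ordering
        spectrum[k] *= cmath.exp(-1j * math.pi * wavelength * dz * u * u)
    return dft(spectrum, inverse=True)


def multislice(wave, slices, dz, wavelength, dx):
    """Alternate thin-slice transmission (scattering) with vacuum propagation (diffraction).

    Each slice is a pair (delta, beta) of per-pixel lists, assuming n = 1 - delta + i*beta.
    """
    k0 = 2.0 * math.pi / wavelength
    for delta, beta in slices:
        # Solid slice: thin phase/absorption screen, exp(i * k0 * (n - 1) * dz).
        wave = [w * cmath.exp(1j * k0 * (-d + 1j * b) * dz) for w, d, b in zip(wave, delta, beta)]
        # Vacuum gap: let the modulated wave diffract before it reaches the next slice.
        wave = propagate(wave, dz, wavelength, dx)
    return wave


# Hypothetical sample: a weakly phase-shifting, weakly absorbing block repeated over 5 slices.
n_px = 64
delta = [1e-5 if 24 <= i < 40 else 0.0 for i in range(n_px)]
beta = [1e-7 if 24 <= i < 40 else 0.0 for i in range(n_px)]
exit_wave = multislice([1.0 + 0j] * n_px, [(delta, beta)] * 5, dz=1000.0, wavelength=0.124, dx=10.0)
intensity = [abs(w) ** 2 for w in exit_wave]  # lengths in nm; 0.124 nm is roughly 10 keV
```

Removing the `propagate` call inside the loop reduces this to the thin-sample (projection) approximation, which simply multiplies the slice transmissions together; the gap between the two models grows as the sample gets thicker relative to the DOF.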

They used multislice propagation to account for X-ray beam diffraction and applied it in two different X-ray 3D imaging setups – ptychographic tomography and holographic tomography.

For both setups, the team started from a guessed sample, used the multislice method to compute a more accurate prediction of the measured data, and then updated the sample’s values in the direction given by a mathematical quantity called the gradient. Each update reduces the difference between the predicted data and the experimentally measured data.
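The predict-compare-update cycle is ordinary gradient descent on a data-mismatch loss. In this minimal sketch, a tiny linear model `A x` stands in for the multislice forward model, an assumption made so the gradient can be written out by hand; the matrix, step size, and function names are hypothetical.

```python
def forward(A, x):
    """Toy linear forward model d_pred = A x (a stand-in for the multislice model)."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in A]


def loss_and_grad(A, x, measured):
    """Least-squares mismatch 0.5 * ||A x - d||^2 and its hand-derived gradient A^T (A x - d)."""
    residual = [p - m for p, m in zip(forward(A, x), measured)]
    loss = 0.5 * sum(r * r for r in residual)
    grad = [sum(A[i][j] * residual[i] for i in range(len(A))) for j in range(len(x))]
    return loss, grad


def reconstruct(A, measured, x0, step, iterations):
    """Start from a guessed sample and repeatedly step against the gradient."""
    x = list(x0)
    history = []
    for _ in range(iterations):
        loss, grad = loss_and_grad(A, x, measured)
        history.append(loss)
        x = [xi - step * gi for xi, gi in zip(x, grad)]
    return x, history


A = [[2.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
x_true = [1.0, -0.5]
measured = forward(A, x_true)  # simulated "experimental" data
x_est, history = reconstruct(A, measured, x0=[0.0, 0.0], step=0.1, iterations=500)
```

Starting from an all-zero guess, the loss falls at every step and `x_est` converges to `x_true`. In the real problem the forward model is nonlinear and vastly larger, which is what makes deriving its gradient by hand laborious.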

By running numerical simulations on Argonne Leadership Computing Facility supercomputers, the researchers found that their method produced higher-quality reconstructions than those based on the thin-sample approximation.

For their numerical simulations, the researchers used automatic differentiation (AD), a technique built into popular deep-learning libraries such as TensorFlow and PyTorch. “AD is the functional math engine that drives the training of neural networks,” Du says, “but it can also play an important role in our physics-based method.”

AD computes the gradient automatically, telling the researchers in which direction to update the sample values without manual calculations. “This used to be done all by hand,” notes Sajid Ali, a Northwestern University Ph.D. student and a participant in Argonne’s W.J. Cody Associates summer program. “Our new processes are going to make it a lot easier.”
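The chain-rule bookkeeping that AD automates can be seen in a toy reverse-mode implementation. The `Var` class below is a hypothetical teaching sketch, not TensorFlow or PyTorch, and supports only addition, subtraction, and multiplication of real scalars. Applied to a small least-squares loss, it recovers the gradient 2A^T(Ax - d) that would otherwise be derived by hand.

```python
class Var:
    """A scalar node in a computation graph for reverse-mode automatic differentiation."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # pairs of (input node, local partial derivative)
        self.grad = 0.0

    @staticmethod
    def _wrap(other):
        return other if isinstance(other, Var) else Var(other)

    def __add__(self, other):
        other = Var._wrap(other)
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    __radd__ = __add__

    def __sub__(self, other):
        other = Var._wrap(other)
        return Var(self.value - other.value, ((self, 1.0), (other, -1.0)))

    def __mul__(self, other):
        other = Var._wrap(other)
        return Var(self.value * other.value, ((self, other.value), (other, self.value)))

    __rmul__ = __mul__

    def backward(self):
        """Push d(output)/d(node) from this output back to every input via the chain rule."""
        order, seen = [], set()

        def visit(node):  # post-order DFS: inputs are appended before the nodes that use them
            if id(node) not in seen:
                seen.add(id(node))
                for parent, _ in node.parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):  # every consumer is processed before its inputs
            for parent, local in node.parents:
                parent.grad += local * node.grad


def squared_mismatch(A, x, measured):
    """Loss = sum_i ((A x)_i - d_i)^2, built from Var operations so AD can differentiate it."""
    loss = 0.0
    for row, d in zip(A, measured):
        residual = sum(a * xj for a, xj in zip(row, x)) - d
        loss = loss + residual * residual
    return loss


A = [[1.0, 2.0], [3.0, 4.0]]
measured = [1.0, 0.0]
x = [Var(0.5), Var(-1.0)]
loss = squared_mismatch(A, x, measured)
loss.backward()
gradient = [xi.grad for xi in x]  # equals 2 * A^T (A x - d) = [-20.0, -30.0]
```

Only the forward computation is written out; the backward pass is generic. That is why changing the forward model (say, from one imaging setup to another) does not require rederiving the gradient.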

AD also provides “enormous flexibility, making it easy to adapt the multislice model to either ptychographic tomography or holographic tomography, or even other imaging setups,” Du says. “We now have the capability to perform thick-sample 3D reconstruction for a wide range of imaging techniques with the same code framework.”

To image large-volume samples, the researchers developed and compared methods for efficiently evaluating the multislice model on high-performance computers. Later this year, Aurora, Argonne’s exascale computer, will debut, in part to handle the enormous data load that comes with APS-U’s hundred- to thousandfold increase in brightness.

The researchers have developed distributed implementations of two algorithms: the tiling-based Fresnel multislice method and the finite difference method. “They differ in terms of mathematical foundation and parallelization,” Du says.

As a further step toward scaling their algorithms to large samples, they developed a parallelization method for the 3D reconstruction problem that uses the tiling-based Fresnel multislice method to evaluate the multislice model.

Du explains: “It’s designed to run on high-performance computers and decouples sample data storage and gradient computation, which requires much less memory per computing node versus the more conventional and easier-to-implement method of data parallelism.”
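A rough, hypothetical estimate shows why splitting the sample saves memory. Here data parallelism is assumed to mean that every node keeps a full copy of the sample, while lateral tiling means each node stores only its own tile plus a halo of neighboring pixels, because diffraction spreads light sideways from slice to slice. The sketch assumes complex64 storage (8 bytes per voxel), a 100 × 4096 × 4096 voxel sample, an 8 × 8 tile grid, and a 32-pixel halo; none of these values or function names come from the team’s implementation.

```python
def tile_ranges(n_pixels, n_tiles, halo):
    """Split [0, n_pixels) into tiles; return (own_start, own_stop, padded_start, padded_stop)."""
    base, extra = divmod(n_pixels, n_tiles)
    ranges, start = [], 0
    for t in range(n_tiles):
        stop = start + base + (1 if t < extra else 0)  # the first `extra` tiles get one more pixel
        ranges.append((start, stop, max(0, start - halo), min(n_pixels, stop + halo)))
        start = stop
    return ranges


def sample_bytes_per_node(nz, ny, nx, tiles_y, tiles_x, halo, bytes_per_voxel=8):
    """Per-node sample storage: full copy (data parallelism) vs. the largest padded tile."""
    full = nz * ny * nx * bytes_per_voxel
    pad_y = max(p_stop - p_start for _, _, p_start, p_stop in tile_ranges(ny, tiles_y, halo))
    pad_x = max(p_stop - p_start for _, _, p_start, p_stop in tile_ranges(nx, tiles_x, halo))
    return full, nz * pad_y * pad_x * bytes_per_voxel


full, tiled = sample_bytes_per_node(100, 4096, 4096, tiles_y=8, tiles_x=8, halo=32)
print(f"data parallel: {full / 1e9:.1f} GB per node; tiled: {tiled / 1e9:.2f} GB per node")
```

Under these assumptions each node holds about 0.27 GB instead of 13.4 GB. The halo is the price of tiling: neighboring nodes must exchange edge data, and the halo has to grow with how far light spreads over the propagation distance.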

Because the researchers use AD functionality from AI tools, their implementation is both flexible and versatile. Du adds that “using AD from these tools will make it easier to interface with routines based on artificial neural networks, which would yield better reconstruction quality by combining neural networks and physics-based models.”

The researchers’ work addresses the problem of imaging thick samples beyond the DOF limit and shows potential to enable APS-U “to fully exploit its capability of large-sample 3D imaging,” Du says. “Beyond this, our work using AD provides a good demonstration of using it to build a versatile framework for image reconstruction.”

About the Author

The author is a freelance science and tech writer based in New Hampshire.