Multiphysics models for the masses

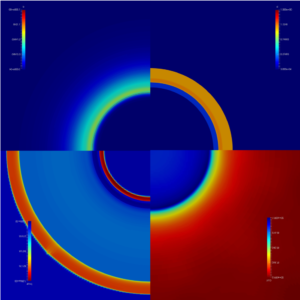

Upper right panel: a simplified inertial confinement fusion capsule as the simulation begins; 1.5 nanoseconds later (lower right), radiation energy impinges on the capsule, heating its material; the dense shell of the capsule (upper left) responds to this heating by expanding, with its inner edge moving inward and its outer edge outward; the capsule material’s expansion causes either side of the expanding shell (lower left) to heat. (Image: Los Alamos National Laboratory.)

As of 2016, the United States had just over 4,000 warheads in its nuclear stockpile, down from a peak of 31,255 during the Cold War. Some of those weapons have been around for 40 years; the newest are at least a couple of decades old.

These warheads were never designed to last forever, and they can fail or become unpredictable as they age. The United States has observed a moratorium on nuclear weapons testing since 1992. So, as stewards of the nuclear stockpile, how do the Department of Energy (DOE) national security laboratories maintain its safety and readiness?

Their main tool is computer simulation. Ideally, the various physical processes at play can be captured in a single model. Such multiphysics codes develop mathematical descriptions of the relevant processes and discretize them into algebraic systems that supercomputers solve, producing data for analysis.
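To make the step from equations to algebraic systems concrete, here is a minimal sketch in Python, far simpler than any production code. It assumes a single toy process, steady one-dimensional diffusion (-u'' = f with the value pinned to zero at both ends), and a standard finite-difference grid. The function name `solve_poisson_1d` and the choice of problem are illustrative assumptions, not anything drawn from the stewardship codes.

```python
def solve_poisson_1d(n, source):
    """Solve -u''(x) = source(x) on (0, 1) with u(0) = u(1) = 0.

    The interval is discretized with n interior grid points, which turns
    the differential equation into an n-by-n tridiagonal linear system.
    """
    h = 1.0 / (n + 1)                      # grid spacing
    xs = [(i + 1) * h for i in range(n)]   # interior grid points

    # Row i of the system: -u[i-1] + 2*u[i] - u[i+1] = h^2 * source(x_i)
    lower = [-1.0] * n   # sub-diagonal (lower[0] is unused)
    diag = [2.0] * n     # main diagonal
    upper = [-1.0] * n   # super-diagonal (upper[n-1] is unused)
    rhs = [h * h * source(x) for x in xs]

    # Thomas algorithm, forward elimination
    for i in range(1, n):
        w = lower[i] / diag[i - 1]
        diag[i] -= w * upper[i - 1]
        rhs[i] -= w * rhs[i - 1]

    # Thomas algorithm, back substitution
    u = [0.0] * n
    u[-1] = rhs[-1] / diag[-1]
    for i in range(n - 2, -1, -1):
        u[i] = (rhs[i] - upper[i] * u[i + 1]) / diag[i]
    return xs, u


# Example: a constant source of 2 on a grid of 9 interior points
xs, u = solve_poisson_1d(9, lambda x: 2.0)
```

With a constant source of 2, the exact answer is u(x) = x(1 - x), which this scheme reproduces up to rounding error because the finite-difference formula is exact for quadratics. A real multiphysics code couples many such systems, in three dimensions and across many processors.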

Like the stockpile itself, however, the computing architectures for which the current stewardship simulation codes were written are decades old. The machines can be replaced, but at some point, fundamental changes in the way modern computers are built – massively parallel architectures with deep memory hierarchies and more complex data storage and processing – will require an overhaul of multiphysics production codes. The multiphysics models’ fidelity and complexity also will continue to evolve with computational physics.

“The rate at which computers – and the questions we use them to answer – are changing today is enough to drive a person mad,” says Aimee Hungerford, a computational physicist at DOE’s Los Alamos National Laboratory (LANL).

Updating codes to run more efficiently on current and next-generation computing architectures has been a goal of Advanced Technology Development and Mitigation, a subprogram of the DOE National Nuclear Security Administration’s Advanced Simulation and Computing initiative.

To meet this need, LANL has launched the Ristra project, for which Hungerford is the physics lead. Ristra oversees development of a user-interface tool called the Flexible Computational Science Infrastructure (FleCSI). FleCSI will essentially act as an abstraction layer for lower-level programming models, such as the Stanford University-based Legion system, making it easier for physicists to implement their models.

It’s not just for nuclear stockpile codes. FleCSI can serve as a wrapper for multiphysics simulations in a variety of fields of interest to the lab, including astrophysics, materials science and atmospheric science.

“When we first began thinking of updating our production codes for new hardware, we knew we had to think hard about what data are actually needed to do a computation,” says LANL computational scientist Ben Bergen, who leads FleCSI development. “Also, where are the data located? Are they on a different processor or a different part of the chip and, if so, what is the lag time to get them? There were a lot of these types of questions to answer.”

As much as possible, the team is designing FleCSI to unburden users of the need to answer these questions, automating data movement and the assignment of tasks to various processors, Bergen says. “Our goal is to make a robust enough abstraction layer so that physicists can concentrate on the physics and they don’t get gummed up in all of the devilish computer science details.”

Other motivations are portability (the capacity to maintain software across different computing architectures) and adaptability. “People have been doing the kind of mathematics leading up to our current simulation capabilities for at least the last 400 years,” Bergen says. “We needed FleCSI to be flexible enough that if somebody develops a new physics package you could just drop it in.”

Bergen and his team want FleCSI to handle a variety of disparate applications, so every year they try a simulation that encompasses different areas of physics. For example, last year they focused on inertial confinement fusion (ICF), which is initiated by rapidly heating or compressing a small fuel pellet. ICF researchers study hydrodynamics and radiation when they heat the pellet with lasers to trigger an implosion; other physics also govern system behavior. A framework like FleCSI would offer a way to solve all of these coupled physics equations together.

“With these projects, we work toward an integrated problem that then drives all the pieces to come together in working order,” Hungerford says. “We then ask, ‘What part of that demonstration was agonizing and what part was really smooth?’ That tells us how well the interface layer is working.”

FleCSI is in year four of a six-year development process. Over the next two years, the team plans to add more data-structure support. The researchers will also implement control replication – a method for scaling problems for Legion – and dependent partitioning, or dividing up a problem domain to run across distributed-memory architectures.
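One common reading of dependent partitioning is that some partitions are derived from others instead of being specified by hand. The toy sketch below, in plain Python, illustrates that idea under stated assumptions: it does not use Legion or FleCSI, the names `block_partition` and `ghost_partition` are hypothetical, and the mesh is just a one-dimensional chain of cells. First the cells are split among processors ("ranks"); then the set of neighboring cells each rank must read but does not own is computed from that first split.

```python
def block_partition(num_cells, num_ranks):
    """Split cell indices 0..num_cells-1 into contiguous, nearly equal blocks."""
    base, extra = divmod(num_cells, num_ranks)
    parts, start = [], 0
    for rank in range(num_ranks):
        # The first `extra` ranks each take one additional cell
        size = base + (1 if rank < extra else 0)
        parts.append(set(range(start, start + size)))
        start += size
    return parts


def ghost_partition(owned_parts, neighbors):
    """Derive, for each rank, the cells it must read but does not own.

    The result depends only on the owned partition and the mesh
    connectivity, so it never has to be written out by hand.
    """
    ghosts = []
    for owned in owned_parts:
        reached = set()
        for cell in owned:
            reached.update(neighbors[cell])
        ghosts.append(reached - owned)
    return ghosts


# A 1D chain of 10 cells in which each cell touches its left and right neighbor
num_cells = 10
neighbors = {
    c: [n for n in (c - 1, c + 1) if 0 <= n < num_cells]
    for c in range(num_cells)
}

owned = block_partition(num_cells, 3)
ghosts = ghost_partition(owned, neighbors)

for rank, (own, ghost) in enumerate(zip(owned, ghosts)):
    print(f"rank {rank}: owns {sorted(own)}, needs copies of {sorted(ghost)}")
```

In this sketch the middle rank needs copies of one cell from each side, while the end ranks need only one. On a real unstructured mesh the connectivity is irregular, which is why deriving such sets automatically is attractive.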

The group also wants to add an arbitrary Lagrangian-Eulerian code, which would enable solution of multiphysics equations from several frames of reference. “This would be a little bit like simultaneously modeling a dye diffusing as it is floating down a river and standing on the river bank and watching it flow by,” Bergen says.
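The river analogy can be written down for one idealized case. The sketch below assumes a one-dimensional river with constant flow speed and constant diffusivity, and a dye patch that starts as a Gaussian blob; under those assumptions the concentration has a closed-form solution. The function names and parameter values are illustrative only. An observer on the bank (the Eulerian view) sees the blob drift past and spread; an observer floating with the current (the Lagrangian view) sees it spread in place.

```python
import math


def dye_seen_from_bank(x, t, velocity, diffusivity, x0=0.0, sigma0=1.0):
    """Eulerian view: concentration at fixed bank position x at time t."""
    variance = sigma0 ** 2 + 2.0 * diffusivity * t   # blob widens by diffusion
    center = x0 + velocity * t                       # blob is carried downstream
    return math.exp(-(x - center) ** 2 / (2.0 * variance)) / math.sqrt(
        2.0 * math.pi * variance
    )


def dye_seen_from_raft(xi, t, diffusivity, x0=0.0, sigma0=1.0):
    """Lagrangian view: concentration at offset xi from a raft drifting with the flow."""
    variance = sigma0 ** 2 + 2.0 * diffusivity * t   # same widening
    # No drift term: in the moving frame the blob stays centered at x0
    return math.exp(-(xi - x0) ** 2 / (2.0 * variance)) / math.sqrt(
        2.0 * math.pi * variance
    )


# The two views describe the same dye once positions are converted
# between frames with xi = x - velocity * t.
velocity, diffusivity, t = 1.5, 0.2, 4.0
x = 7.0
bank_value = dye_seen_from_bank(x, t, velocity, diffusivity)
raft_value = dye_seen_from_raft(x - velocity * t, t, diffusivity)
```

Here `bank_value` and `raft_value` agree up to rounding error, since they are the same physical quantity expressed in two coordinate systems. The Lagrangian description is simpler because the flow term drops out, which hints at why codes that can move between frames are useful.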

The team also will focus on supporting different groups using FleCSI, but only after further improving the user interface. “Right now, we’re like proud parents,” Hungerford says. “However, we want our child to behave a bit better before we invite anyone over to meet her.”

Even as it works to make FleCSI more accessible, the team doesn’t want people to think of it as “a universal solution for all computational physics and computer science challenges,” Hungerford says. “That’s not the goal. Rather, FleCSI is attempting to be a helpful component in efforts to build solutions for the challenges people choose to take on.”

About the Author

Chris Palmer has written for more than two dozen publications, including Nature, The Scientist and Cancer Today and for the National Institutes of Health. He holds a doctorate in neuroscience from the University of Texas, Austin, and completed the Science Communication Program at the University of California, Santa Cruz.