Climate customized

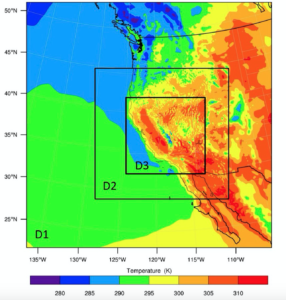

Temperature (K) predictions from 10-year high-resolution regional climate simulations Argonne ran for a fire-risk mapping project. The outermost domain (D1) has a spatial resolution of 18 kilometers. The two inner domains have resolutions of 6 kilometers (D2) and 2 kilometers (D3), respectively. (Image: Argonne National Laboratory.)

Superstorm Sandy showed how coastal storms can disrupt energy infrastructure. Along the Northeast coast, storm surge and strong winds downed lines, flooded substations and damaged or shut down dozens of power plants.

Eight million people in 21 states lost power and faced costly repairs. Although by traditional measures it was considered a 100-year storm, Sandy-like extreme weather events are becoming more frequent, driven by climate change. As utility companies plan to construct or update power plants, transmission lines and other facilities and equipment, they must factor in how the effects of climate change will shape how and where they build.

Responding to this growing need for information, the Department of Energy’s (DOE’s) Argonne National Laboratory is in the midst of an initiative called Climate Data for Decision Making. Organized by Argonne’s Science & Technology Partnership and Outreach directorate (STPO), the one-year pilot is working with energy providers and others to generate climate models of varying time scales, geographical areas and complexities to assess flooding, wildfires, drought and other climate-driven threats.

At the Argonne Leadership Computing Facility (ALCF), a DOE Office of Science user facility, Environmental Science Division (EVS) climate modelers collaborate with computer scientists to slice the world into a three-dimensional grid and estimate climate conditions in each chunk for years into the future. Generating these models is computationally intensive, and the cost grows as the grid slices get finer and the time intervals shorter. The finer the resolution, the more accurate the predictions about climate effects in a given location.
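To give a rough sense of why finer grids are so demanding, the sketch below counts the horizontal cells needed to tile one area at the three grid spacings used in the fire-risk project (18, 6 and 2 kilometers). The 1,800-kilometer square domain and the function name `horizontal_cells` are hypothetical, chosen only for illustration. The count also ignores vertical layers and the shorter time steps that finer grids typically require, so it understates the real growth in cost.

```python
def horizontal_cells(width_km: float, height_km: float, spacing_km: float) -> int:
    """Number of horizontal grid cells needed to tile a rectangular domain."""
    return round(width_km / spacing_km) * round(height_km / spacing_km)


# Hypothetical 1,800 km x 1,800 km domain (an assumption, not the project's extent).
DOMAIN_KM = 1800.0

# Cell counts at the three grid spacings mentioned in the article.
counts = {dx: horizontal_cells(DOMAIN_KM, DOMAIN_KM, dx) for dx in (18.0, 6.0, 2.0)}

for spacing_km, cells in counts.items():
    ratio = cells // counts[18.0]
    print(f"{spacing_km:>4.0f} km spacing: {cells:>7,} cells ({ratio}x the 18 km grid)")
```

Over the same area, going from 18 kilometers to 6 kilometers multiplies the horizontal cell count by 9, and going to 2 kilometers multiplies it by 81.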

The most accurate simulations are run on supercomputers, such as the ALCF’s Mira, which “allows us to run climate models with cutting-edge spatial and temporal resolution,” says Yan Feng, an EVS climate scientist and an initiative co-lead.

Feng and colleagues have created a 10-year map of fire-risk predictions for the California Public Utilities Commission. They modeled surface wind speeds, temperature extremes, air pressure levels and precipitation change relative to humidity change, all at hourly intervals at a 2-kilometer resolution. The team also used soil moisture, vegetation distribution and other data.

The team eventually built out the model to cover large parts of California and Nevada and extended it to 6-kilometer resolution for surrounding Western states, then to 18 kilometers for the entire West and part of the Pacific Ocean. The simulations burned through 7 million core-hours on Mira.

“This project really pushed us to the limit in terms of what we can do with the current regional climate model,” Feng says, noting that such detail was “not even imaginable a few years ago.”

Such high-resolution models necessitate tradeoffs among the duration, the domain size and the number of meteorological variables that can be simulated. Additional compromises come into play when combining commonly used climate models with more boutique-size data sets, such as soil moisture.

In another project, for example, the team had to estimate flood vulnerability for Northeast coastal power facilities. The researchers gave up some resolution – using 12-kilometer grids – so they could extend predictions over 20 years and to the entire continental United States.

“When we run climate models, we can estimate things like temperature, wind speeds and precipitation,” Feng says. “But most of the time when I talk to private-sector clients, they don’t really care about the numbers. They just want you to tell them, at this location, in the next 10 years, what the chances are of extreme weather events such as heat waves or flooding or drought.”
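One simple way to turn a yearly likelihood into the kind of answer described here is sketched below. It is not the team's method. It assumes the chance of the event is the same every year and that years are independent, which is a simplification, since the article notes that such events are becoming more frequent. The function name `chance_of_at_least_one` is hypothetical.

```python
def chance_of_at_least_one(annual_probability: float, years: int) -> float:
    """Chance of at least one event in `years` years.

    Assumes a constant annual probability and independent years
    (an illustrative simplification, not a climate-model result).
    """
    if not 0.0 <= annual_probability <= 1.0:
        raise ValueError("annual_probability must be between 0 and 1")
    if years < 0:
        raise ValueError("years must be non-negative")
    # Probability of no event in any year, subtracted from 1.
    return 1.0 - (1.0 - annual_probability) ** years


# A "100-year" event has a 1 percent chance of occurring in any given year.
ten_year_chance = chance_of_at_least_one(0.01, 10)
print(f"Chance in the next 10 years: {ten_year_chance:.1%}")
```

Under these assumptions, a 100-year event has roughly a 9.6 percent chance of occurring at least once in a 10-year window.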

Because the team’s climate models weren’t necessarily designed for extreme-weather predictions, they must be customized to meet client needs – which may be difficult to pin down. “They’ll ask us, ‘What do we need to know about future climate scenarios and how should we prepare?’” Feng says. “So we occasionally help them define the problem, which takes a lot of communication.”

Other projects the team has tackled over the past year range from the mundane – identifying wind-speed intensity and seasonal variations for wind-energy companies – to the complicated. For example, the team has done multilayer modeling for utilities and other agencies. First, a hydrologist modeled citywide street runoff after various extreme precipitation events. Next, the team used a sub-kilometer model to investigate how fires in the region might spread. Finally, the researchers predicted how smoke and other pollutants from wildfires might affect regional air quality.

“These kinds of combination tools are not yet available,” Feng says, “so we had to assemble different models.”

Customizing tools is a key part of the initiative’s future, Feng says. The team has been tracking all the tailored analyses it’s created so far and has identified several that would be useful to combine in off-the-shelf packages to share with the climate-modeling community.

As the one-year project winds down, STPO is connecting Feng’s group with sponsors from the private sector. Besides creating specialized modeling packages, the team is also looking at ways to port its climate data into the popular GIS framework that many private-sector clients are already comfortable using.

“It amazes me how warmly our work has been embraced by industry,” Feng says. “We’re really doing what we can to steer our research to effectively respond to our clients’ needs and meet them where they’re at.”

About the Author

Chris Palmer has written for more than two dozen publications, including Nature, The Scientist and Cancer Today, and for the National Institutes of Health. He holds a doctorate in neuroscience from the University of Texas, Austin, and completed the Science Communication Program at the University of California, Santa Cruz.