Powering down

Designers of exascale computing systems must get a handle on the power cost of performing a quintillion calculations per second. Above: Cables in one of 30 cabinets that comprise Edison, a Cray XC30 supercomputer at the National Energy Research Scientific Computing Center. (Photo: Lawrence Berkeley National Laboratory.)

If supercomputing is to reach its next milestone – exascale, or the capacity to perform at least 1 quintillion (10¹⁸) scientific calculations per second – it has to tackle the power problem.

“Energy consumption is expensive, and usually the cost of maintaining such a large-scale system is as expensive as its price,” says Shuaiwen Leon Song, a Pacific Northwest National Laboratory staff research scientist. Already the average electricity used by each of today’s five fastest high-performance computing (HPC) systems equals that consumed by a city of 20,000 people, a paper Song coauthored notes. Such high power also could cause high temperatures that contribute to system failures and shorten equipment lifespan, Song adds. “Therefore, saving large amounts of energy will be very necessary as long as performance is not notably affected.”

The paper, presented at a conference earlier this year, offers a counterintuitive tactic to reach that goal: essentially turning down the power supplied to each of the myriad processors comprising an exascale system.

The technique, called undervolting, lowers on-chip voltages below currently permissible norms even as the number of power-hungry processors soars. Undervolting has risks, as malnourished transistors may be more likely to fail. But the team of six researchers says it has found a testable way to keep supercomputers running with acceptable performance by using resilient software.

The authors used modeling plus experiments and performance analysis on a commercial HPC cluster to evaluate “the interplay between energy efficiency and resilience” for HPC systems, the paper says. Their combination of undervolting and mainstream software resilience techniques yielded energy savings of up to 12.1 percent, early tests found.

Computers normally work within the limits of dynamic voltage and frequency scaling (DVFS), Song says. DVFS operates processors at voltages that are paired with specific frequency levels – the clock speeds at which processors run. If a voltage is less than what a given frequency requires or drops below the prescribed DVFS range, the most sensitive transistors can produce errors. That’s why “most commercial machines do not give users the option to conduct undervolting,” Song says.
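The standard first-order approximation for the switching (dynamic) power of CMOS chips shows why voltage is such a tempting knob. This textbook model is not taken from the paper. Here α is the fraction of transistors switching per cycle, C the switched capacitance, V the supply voltage and f the clock frequency:

```latex
P_{\mathrm{dyn}} \approx \alpha\, C\, V^{2} f
\qquad\Longrightarrow\qquad
\left.\frac{P_{\mathrm{dyn}}(V_1)}{P_{\mathrm{dyn}}(V_0)}\right|_{f\ \text{fixed}}
  = \left(\frac{V_1}{V_0}\right)^{2}

% Example: V_1 = 0.9 V_0 at an unchanged clock gives (0.9)^2 = 0.81,
% i.e. roughly 19 percent less dynamic power.
```

Because power falls with the square of voltage, trimming voltage while leaving the clock alone cuts power without, in principle, slowing the computation. The model ignores static (leakage) power and components such as memory and networking, so whole-machine savings would be smaller than this per-chip figure suggests.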

Some of these errors are soft errors, he says – common transient faults such as memory bit flips that computers can correct on the fly. Hard errors, in contrast, often stop processors or crash the entire system.
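What a bit flip does depends on which bit it hits. The short Python sketch below is an illustration only, not part of the study. It inverts a single bit in the 64-bit IEEE 754 encoding of the number 1.0. The helper name `flip_bit` is hypothetical.

```python
import struct


def flip_bit(x: float, bit: int) -> float:
    """Return x with one bit (0 = least significant) of its 64-bit IEEE 754 encoding inverted."""
    # Reinterpret the double's bytes as an unsigned 64-bit integer.
    (bits,) = struct.unpack("<Q", struct.pack("<d", x))
    bits ^= 1 << bit  # simulate a single-bit upset
    # Reinterpret the modified integer as a double again.
    (flipped,) = struct.unpack("<d", struct.pack("<Q", bits))
    return flipped


if __name__ == "__main__":
    for bit in (0, 52, 62):
        print(bit, flip_bit(1.0, bit))
    # 0 1.0000000000000002  -> lowest mantissa bit: a negligible error
    # 52 0.5                -> lowest exponent bit: the value is halved
    # 62 inf                -> highest exponent bit: the value overflows to infinity
```

The same physical event can therefore be harmless, subtly wrong or catastrophic. Whether it is tolerated, caught by error-correcting hardware or left to propagate depends on where it lands and what protections are in place.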

Soft errors caused by undervolting can be handled with mainstream software-level fault-tolerance techniques, the study suggests. Meanwhile, under the team’s proposed techniques, operating frequencies can remain fixed, maintaining computational throughput, rather than varying with voltage.
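One widely used family of software-level techniques is algorithm-based fault tolerance (ABFT). In ABFT, a computation carries a cheap checksum that exposes silent corruption. The article does not say which techniques the team used, so treat the following as a generic, minimal Python sketch of the idea for a matrix-vector product. The function names and the `fault` parameter used to inject an error are hypothetical.

```python
from typing import List, Optional, Tuple

Matrix = List[List[float]]


def abft_matvec(a: Matrix, x: List[float],
                fault: Optional[Tuple[int, float]] = None,
                tol: float = 1e-9) -> Tuple[List[float], bool]:
    """Compute y = A x and check it against a column checksum of A.

    fault: optional (index, delta) that corrupts y[index], mimicking a soft error.
    Returns (y, passed); passed is False when the checksum exposes corruption.
    """
    # Checksum row: the column sums of A, so (checksum row) . x should equal sum(y).
    checksum_row = [sum(col) for col in zip(*a)]
    y = [sum(aij * xj for aij, xj in zip(row, x)) for row in a]
    if fault is not None:
        i, delta = fault
        y[i] += delta
    expected = sum(c * xj for c, xj in zip(checksum_row, x))
    passed = abs(sum(y) - expected) <= tol * max(1.0, abs(expected))
    return y, passed


def resilient_matvec(a: Matrix, x: List[float],
                     fault: Optional[Tuple[int, float]] = None) -> Tuple[List[float], bool]:
    """Detect a corrupted result and recompute it once, assuming the fault was transient."""
    y, passed = abft_matvec(a, x, fault)
    if not passed:
        y, passed = abft_matvec(a, x)  # the retry runs without the injected fault
    return y, passed


if __name__ == "__main__":
    A = [[1.0, 2.0], [3.0, 4.0]]
    print(abft_matvec(A, [1.0, 1.0], fault=(0, 0.5)))       # ([3.5, 7.0], False)
    print(resilient_matvec(A, [1.0, 1.0], fault=(0, 0.5)))  # ([3.0, 7.0], True)
```

The check costs one extra dot product rather than a full second computation, which is why such techniques count as lightweight. A single checksum can miss errors that happen to cancel out. Pinpointing or correcting the bad entry, instead of recomputing, takes additional checksums.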

In their research, the authors first evaluated the pros and cons of various energy-saving techniques for HPC. None analytically models “the entangling effects of energy efficiency and resilience at scale like ours,” they found. The standard DVFS approach, for instance, has “been widely applied in HPC for energy-saving purposes,” wrote the authors. They used one such DVFS program, called Adagio, as a comparison with their own method.

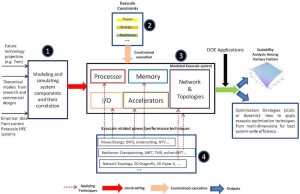

The constraints at scale for building exascale architectures. The undervolting component (labeled 4 in the figure) is part of ongoing work that Pacific Northwest National Laboratory’s (PNNL) HPC group and its collaborators are conducting to build energy-efficient HPC systems. (Credit: PNNL.)

Adagio doesn’t use undervolting. Instead, it raises or lowers core clock frequency within the accepted DVFS range.

“Frequency is the main target here,” Song says, with the program automatically adjusting clock speed based on its predictions of the computational workload. “Commonly, if you use a lower frequency at the right moment, you may achieve energy savings without affecting the performance. But with the state-of-the-art DVFS, you cannot manipulate the voltage flexibly.”
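Slowing the clock “at the right moment” works like this: if a processor would otherwise finish early and sit idle, for example while waiting on a message from another node, it can run slower and still be on time. The Python below is a simplified illustration of that idea, not Adagio’s actual algorithm. The frequency list, the time budget and the assumption that runtime scales inversely with clock speed are all hypothetical.

```python
from typing import Sequence


def pick_frequency(work_time_at_fmax: float, time_budget: float,
                   freqs_ghz: Sequence[float]) -> float:
    """Return the lowest available frequency that still meets the time budget.

    work_time_at_fmax: predicted compute time (s) when running at the top frequency.
    time_budget: time (s) until the result is actually needed, e.g. when a
        blocking message from another process is expected to arrive anyway.
    Assumes a fully CPU-bound task whose runtime scales as f_max / f.
    """
    f_max = max(freqs_ghz)
    for f in sorted(freqs_ghz):
        predicted = work_time_at_fmax * f_max / f
        if predicted <= time_budget:
            return f
    return f_max  # no slack to exploit: run at full speed


if __name__ == "__main__":
    levels = [1.2, 1.6, 2.0, 2.4]  # hypothetical DVFS frequency steps, GHz
    print(pick_frequency(1.0, 1.6, levels))  # 1.6: finishes in ~1.5 s, inside the 1.6 s budget
```

Under conventional DVFS, each frequency step comes paired with a prescribed voltage, so choosing the frequency also fixes the voltage. That coupling is what the team’s approach loosens.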

Drawing on their modeling studies of various options, his group began with Adagio’s prediction of what frequency to use. But instead of letting voltages vary with frequencies, the researchers kept voltages unchanged at the lowest levels that can maintain performance.

If transistors began failing under this low-voltage diet, the scientists could re-evaluate which resilience software approach could tolerate such glitches, Song says. “So we were able to save further power consumption.”
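A toy energy model captures the trade-off the team studied, in which some time is spent on error handling in exchange for lower power. The model rests on the fact that energy is power multiplied by time. In this hypothetical Python sketch:

- the clock stays fixed;
- dynamic power scales with the square of voltage;
- static power is held constant;
- resilience work adds a fractional runtime overhead.

All parameter values are illustrative assumptions, not figures from the paper.

```python
def relative_power(v_ratio: float, dynamic_share: float) -> float:
    """Power relative to baseline at the same clock frequency.

    v_ratio: undervolted voltage / baseline voltage.
    dynamic_share: fraction of baseline power that is dynamic (scales with V**2);
        the remainder is treated as constant static power (a simplification).
    """
    return dynamic_share * v_ratio ** 2 + (1.0 - dynamic_share)


def relative_energy(v_ratio: float, dynamic_share: float, error_overhead: float) -> float:
    """Energy relative to baseline: relative power times relative runtime.

    error_overhead: extra runtime fraction spent detecting and recovering from
        soft errors (e.g. checksum checks, recomputation, checkpoint restarts).
    """
    return relative_power(v_ratio, dynamic_share) * (1.0 + error_overhead)


def break_even_overhead(v_ratio: float, dynamic_share: float) -> float:
    """Largest runtime overhead at which undervolting still saves energy."""
    return 1.0 / relative_power(v_ratio, dynamic_share) - 1.0


if __name__ == "__main__":
    # Hypothetical inputs: 10% voltage cut, 60% of power dynamic.
    print(round(relative_energy(0.9, 0.6, 0.05), 4))  # 0.9303 -> about 7% energy saved
    print(round(relative_energy(0.9, 0.6, 0.20), 4))  # 1.0632 -> savings wiped out
    print(round(break_even_overhead(0.9, 0.6), 3))    # 0.129
```

With these made-up numbers, undervolting pays off only while resilience overhead stays below roughly 13 percent of runtime. This is why the cost of the fault-tolerance technique matters as much as the voltage cut itself.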

The researchers tested their ideas on a 64-core cluster equipped with special hardware that let them take fine-grained performance and power measurements. The hardware could also lower on-chip voltages without altering frequencies.

Using this setup, they found energy could indeed be further reduced without significantly harming performance or causing system crashes. “On average, combining undervolting and Adagio can further save 9.1 percent more energy than just Adagio, with less than 8.5 percent extra performance loss,” their paper says. “We are able to further save energy on top of Adagio through undervolting by effectively leveraging lightweight resilience techniques.”

The paper’s lead author, University of California, Riverside, doctoral student Li Tan, was a Department of Energy-funded summer intern under Song. “We came up with this idea and worked on it together,” Song says.

To which he adds, “This is just a preliminary study to show that this research can lead to very effective energy savings and may be applied to current and future large-scale systems.”

About the Author

Monte Basgall is a freelance writer and former reporter for the Richmond Times-Dispatch, Miami Herald and Raleigh News & Observer. For 17 years he covered the basic sciences, engineering and environmental sciences at Duke University.