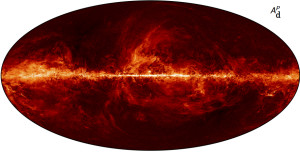

Noisy universe

The Planck satellite’s full-sky data of hot, ionized dust in the galaxy, shown here, deflated a widely publicized finding – that a twist in the polarization of microwaves filtering to Earth from space was evidence of faster-than-light inflation of the universe immediately after the Big Bang. The Planck data showed that the twist was instead introduced by galactic dust. The search for evidence of inflation in the cosmic microwave background continues. (Image: Planck Collaboration.)

From August 2009 through October 2013, the Planck space observatory gaped at the sky, looking deeply into the past. Using 72 photon detectors, it captured some of the oldest light in the cosmos – the faint glow of the cosmic microwave background radiation, CMB for short.

“These are the photons from the Big Bang; they have seen it all,” says Julian Borrill, group leader of the Computational Cosmology Center at Lawrence Berkeley National Laboratory. “They have traced the entire history of the universe, and everything that happened in the history of the universe leaves its imprint on this photon field.”

Since the 1980s, cosmologists who study this light have been devising experiments that gather more and more data from it. They then use high-performance computers to analyze the information, creating maps and tracing correlations among the pixels that comprise them.

In those connections, they seek the seeds of today’s galactic structures. Written in small CMB perturbations are the blueprints of galaxy clusters, the largest structures in the known universe. In some regions, galaxies in a cluster are lined up in a train. In other places, they’re stacked, forming vast walls. And some areas are voids with scarcely a galaxy to be found. Each of these features resulted from initial conditions of the cosmos – perturbations in the shapeless mass of the universe shortly after the Big Bang.

Sooner or later, the influx of Planck data was destined to overwhelm the number-crunching prowess of even the most powerful supercomputers. Over the past 15 years, as ground-based, balloon-borne and satellite experiments began transmitting a flood of information, the size of the data sets has increased exponentially, and current and planned ground-based projects promise to sustain that growth over the next 15 years. Manipulating the data for a map of a hundred million pixels means working with a matrix a hundred million rows by a hundred million columns, and that would require a computer to perform a trillion trillion (10²⁴) operations.
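The trillion-trillion figure is consistent with a simple scaling argument. The sketch below assumes, as an illustration rather than a description of the team's code, that map-making works with a dense matrix that has one row and one column per pixel, so that operations such as inverting it cost on the order of N³ for N pixels. The sustained speed of one petaflop/s is likewise a hypothetical round number.

```python
# Back-of-the-envelope cost of dense map-making (illustrative assumptions only).
N_PIX = 10**8  # a hundred million pixels, from the text

# Assumption: a dense N_PIX x N_PIX matrix whose inversion costs ~N_PIX**3 operations.
ops = N_PIX**3

# Hypothetical machine sustaining one petaflop/s (10**15 operations per second).
SUSTAINED_OPS_PER_SECOND = 10**15
SECONDS_PER_YEAR = 365.25 * 24 * 3600

seconds = ops / SUSTAINED_OPS_PER_SECOND
years = seconds / SECONDS_PER_YEAR

print(f"operations: {ops:.0e}")
print(f"time at 1 petaflop/s: about {years:.0f} years")
```

Even at that sustained speed, the calculation would run for roughly three decades.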

“That’s not happening anytime soon,” Borrill says.

Cosmologists need such tsunami-sized data sets because the faint signals they seek are awash in irrelevant noise. The more information they have, the more likely they are to detect the signals buried in it.
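Why more data helps can be seen in the simplest case. For white noise, which is uncorrelated from one sample to the next, averaging N measurements shrinks the noise by a factor of √N. The sketch below uses made-up signal and noise levels and covers only this idealized case; correlated noise, like the detector noise described below, averages down more slowly.

```python
import random
import statistics

def estimate_signal(true_signal, noise_sigma, n_samples, rng):
    """Average n noisy measurements of a constant (hypothetical) signal."""
    samples = [true_signal + rng.gauss(0.0, noise_sigma) for _ in range(n_samples)]
    return statistics.fmean(samples)

rng = random.Random(0)
TRUE_SIGNAL = 0.01  # hypothetical faint signal
NOISE_SIGMA = 1.0   # noise 100 times larger than the signal

for n in (100, 10_000, 1_000_000):
    est = estimate_signal(TRUE_SIGNAL, NOISE_SIGMA, n, rng)
    expected_error = NOISE_SIGMA / n ** 0.5  # white noise shrinks as 1/sqrt(n)
    print(f"n={n:>9,}: estimate={est:+.4f}  (expected error ~{expected_error:.4f})")
```

With 100 samples the estimate is dominated by noise; with a million, the expected error (0.001) is a tenth of the signal.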

Borrill and his colleagues are working to sift those signals from the noise. They work closely with Reijo Keskitalo and Theodore Kisner, both computer systems engineers at Berkeley Lab. Borrill is also a senior research physicist at the University of California, Berkeley, Space Sciences Laboratory.

Since 1997, using some of the world’s most powerful supercomputers at Berkeley Lab’s National Energy Research Scientific Computing Center (NERSC), the researchers have analyzed a growing volume of CMB data on ever-changing computer architectures. They have created algorithms and implementations that replace impossibly large calculations with simulations and approximations. They’ve designed new approaches that keep the work flowing despite bottlenecks at input and output and between processors.

After developing new approaches to analyze data from the balloon-borne experiments BOOMERanG and MAXIMA, the team now is analyzing Planck data. The group is building and using even newer tactics to generate simulations of the CMB and the satellite’s detectors to draw constraints on the noise.

“The primary (noise) source is the detectors themselves,” Borrill says. They were “exquisitely sensitive,” he says, but “every system has thermal noise.”

Unlike white noise, which can be averaged away in straightforward ways, the Planck detector noise is complex. “The challenge with this noise is that it has correlations over time, so it’s almost like ringing a bell,” Borrill says. Each detector kept snaring photons before the oscillations from earlier photon impacts had died away. “So part of the noise in every observation is correlated with what happened in the immediate past,” he says.
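The “ringing bell” behavior means each noise sample partly remembers the ones before it. A minimal way to model that is a first-order autoregressive, or AR(1), process, in which each sample keeps a fraction `alpha` of the previous one. This is a simplified stand-in chosen for illustration, not the noise model used in the Planck analysis (Planck detector noise is commonly described as a low-frequency, 1/f, component on top of white noise), and the parameter values are hypothetical. Comparing lag-1 autocorrelations shows the difference: near zero for white noise, near `alpha` for the correlated series.

```python
import random

def white_noise(n, sigma, rng):
    """Independent Gaussian samples: no memory of the past."""
    return [rng.gauss(0.0, sigma) for _ in range(n)]

def correlated_noise(n, sigma, alpha, rng):
    """AR(1) noise: each sample keeps a fraction alpha of the previous one."""
    out = []
    prev = 0.0
    for _ in range(n):
        prev = alpha * prev + rng.gauss(0.0, sigma)
        out.append(prev)
    return out

def lag1_autocorrelation(x):
    """Correlation between each sample and the one immediately before it."""
    n = len(x)
    mean = sum(x) / n
    num = sum((x[i] - mean) * (x[i + 1] - mean) for i in range(n - 1))
    den = sum((v - mean) ** 2 for v in x)
    return num / den

rng = random.Random(42)
w = white_noise(50_000, 1.0, rng)
c = correlated_noise(50_000, 1.0, 0.9, rng)  # hypothetical alpha = 0.9
print(f"white noise lag-1 autocorrelation:      {lag1_autocorrelation(w):+.3f}")
print(f"correlated noise lag-1 autocorrelation: {lag1_autocorrelation(c):+.3f}")
```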

Such multifaceted noise is extremely difficult to reduce. Part of the problem is that cosmologists have only one universe to study, Borrill says. “The trouble with having a single realization is that the variance is formally infinite. What’s the uncertainty on one realization? We have no idea.”

To constrain that uncertainty, the scientists turned to Monte Carlo modeling techniques. Using Edison, NERSC’s Cray XC30, they simulated 10,000 different skies, each as Planck would have measured it.

The simulations replicated not only the detectors’ capture of photons but also the thermal noise. By analyzing the noise’s effects on the simulated photons’ known values, the team can establish limits on the uncertainty the noise introduces. Then the researchers can go back to the real Planck data and begin to sort the signal from the noise.
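The Monte Carlo logic can be sketched in a few lines. Each hypothetical simulated sky below is a known signal plus random noise, reduced to a single estimate; because the true value is known, the spread of the estimates across many simulations measures the uncertainty the noise introduces. The signal value, sample counts and white-noise model are illustrative assumptions, far simpler than Planck’s simulations. The first step also illustrates Borrill’s point about a single realization: a sample variance cannot be computed from one value.

```python
import random
import statistics

def simulate_observation(true_signal, n_samples, rng):
    """One hypothetical simulated sky: a known signal plus white noise, averaged."""
    data = [true_signal + rng.gauss(0.0, 1.0) for _ in range(n_samples)]
    return statistics.fmean(data)

rng = random.Random(7)
TRUE_SIGNAL = 0.5  # known input value (hypothetical)
N_SAMPLES = 100    # measurements per simulated sky (hypothetical)
N_SIMS = 10_000    # number of simulated skies, as in the text

# One realization: the spread cannot be estimated at all.
try:
    statistics.variance([simulate_observation(TRUE_SIGNAL, N_SAMPLES, rng)])
except statistics.StatisticsError as err:
    print("single realization:", err)

# Many realizations: the spread of the estimates quantifies the noise.
estimates = [simulate_observation(TRUE_SIGNAL, N_SAMPLES, rng) for _ in range(N_SIMS)]
bias = statistics.fmean(estimates) - TRUE_SIGNAL
spread = statistics.stdev(estimates)
print(f"bias: {bias:+.4f}, noise-induced uncertainty: {spread:.4f}")
```

With 100 unit-variance white-noise samples per sky, the spread should come out close to 0.1, that is, 1/√100.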

Generating the simulations, analyzing the resulting data and reducing them to sky maps demanded an enormous amount of computing time: 25 million processor hours. It would have required much more if the team hadn’t devised ways to compensate for aspects of high-performance machines that Moore’s law does not improve. Although the number of transistors on integrated circuits has doubled roughly every two years, as the law predicts, input-output pipelines and the links between processors haven’t kept pace.

“Input-output speed never grows with Moore’s law and communication between the processors,” Borrill says, “is an overhead as well.” Whereas supercomputers’ theoretical top speeds keep up with demands from data, “the actually realized performance may not, so that’s the real challenge. Can we take our codes and obtain a sufficient fraction of that theoretical peak performance that we can actually handle the data?”
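A rough way to see why realized performance lags is an estimate in the spirit of Amdahl’s law: if computation speeds up with Moore’s law but input-output and communication speed up less, the slower part comes to dominate the runtime. In the sketch below, the two-year doubling period comes from the text, while the 10 percent I/O share and the fourfold I/O improvement are hypothetical numbers chosen only for illustration.

```python
def moore_factor(years, doubling_period=2.0):
    """Growth factor if capability doubles every doubling_period years."""
    return 2 ** (years / doubling_period)

def realized_fraction(compute_speedup, io_speedup, io_share=0.1):
    """Fraction of the ideal (compute-only) speedup that a whole job achieves.

    io_share is the hypothetical fraction of the original runtime spent on
    I/O and communication; compute time shrinks by compute_speedup and
    I/O time shrinks by io_speedup.
    """
    new_time = (1 - io_share) / compute_speedup + io_share / io_speedup
    actual_speedup = 1 / new_time
    return actual_speedup / compute_speedup

peak_gain = moore_factor(15)  # 2**7.5, about 181
print(f"compute growth over 15 years: {peak_gain:.0f}x")
print(f"fraction of peak realized: {realized_fraction(peak_gain, io_speedup=4.0):.1%}")
```

If I/O improved as fast as computation, the realized fraction would stay at 100 percent; the gap between the two growth rates is what drags it down.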

The researchers saw a way to avoid some time-consuming input-output. They began by handling their simulated data just as they did the real data. As they generated each simulation, they saved it to disk, then read the data back into the machine, which transformed the information into maps. “Once we tried to scale that up to very large supercomputers, the huge bulk of the time was being spent writing the data out to disk and then reading it back in again,” Borrill says. “And that was only going to get worse as we went to bigger and bigger supercomputers. That’s when we realized the I/O is completely redundant.”

The team now generates simulations as needed, feeding them directly into the map-making algorithm. “And so the simulation would be done during the runtime of the map-making, and we never have to touch disk,” Borrill says. “That broke that I/O bottleneck that was preventing us from reaching the theoretical peak performance of the computer.”
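The difference between the two workflows is the difference between writing every simulated sample to disk and handing it straight to the map-maker. The sketch below uses a Python generator, so each chunk of simulated time-ordered data is binned into a map as soon as it is produced and then discarded. The simulator, chunk sizes and naive binned map-maker are hypothetical simplifications; the map-making algorithms used for Planck are considerably more sophisticated.

```python
import random
from typing import Iterator, List, Tuple

Sample = Tuple[int, float]  # (pixel index, measured value)

def simulate_chunks(true_map: List[float], n_chunks: int, chunk_size: int,
                    rng: random.Random) -> Iterator[List[Sample]]:
    """Hypothetical simulator: yields one chunk of time-ordered data at a time."""
    n_pix = len(true_map)
    for _ in range(n_chunks):
        chunk = []
        for _ in range(chunk_size):
            pix = rng.randrange(n_pix)
            chunk.append((pix, true_map[pix] + rng.gauss(0.0, 1.0)))
        yield chunk  # nothing is ever written to disk

def bin_map(chunks: Iterator[List[Sample]], n_pix: int) -> List[float]:
    """Naive binned map: the average of all samples falling in each pixel."""
    totals = [0.0] * n_pix
    hits = [0] * n_pix
    for chunk in chunks:  # each chunk is consumed, then discarded
        for pix, value in chunk:
            totals[pix] += value
            hits[pix] += 1
    return [t / h if h else 0.0 for t, h in zip(totals, hits)]

rng = random.Random(3)
true_map = [float(i) for i in range(12)]  # hypothetical 12-pixel sky
sky_map = bin_map(simulate_chunks(true_map, n_chunks=100, chunk_size=1_000, rng=rng),
                  n_pix=len(true_map))
print([round(v, 2) for v in sky_map])
```

Because the generator produces data only when the map-maker asks for it, simulation and map-making overlap in time, and memory use stays at one chunk regardless of how many samples are simulated.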

To save time lost in shuttling data from one processor to another, the team developed a hybrid of the OpenMP interface for parallelizing multiple threads and the MPI library for handling communications in parallel processing. In the researchers’ scheme, MPI comes into play only when communicating from one node to another. Within nodes, the system invokes the multithreading parallelism characteristic of OpenMP. The hybrid took advantage of the architecture of Hopper, NERSC’s Cray XE6, with its 153,000-plus cores in 6,384 nodes. Combined with other optimizations, the approach reduced the time needed for some analyses from nearly six hours to about a minute.
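One way to see why funneling communication through one MPI process per node helps is to count potential point-to-point channels. The sketch assumes 24 cores per node, which reproduces the “153,000-plus” figure (6,384 × 24 = 153,216), and assumes the worst case in which any process may exchange messages with any other; real codes communicate more selectively, so the ratio is only indicative.

```python
NODES = 6_384
CORES_PER_NODE = 24  # assumption: 6,384 x 24 = 153,216, matching "153,000-plus"
cores = NODES * CORES_PER_NODE

def pairwise_channels(ranks):
    """Distinct process pairs if every MPI rank may talk to every other."""
    return ranks * (ranks - 1) // 2

pure_mpi = pairwise_channels(cores)  # one MPI rank per core
hybrid = pairwise_channels(NODES)    # one MPI rank per node, OpenMP threads inside

print(f"cores: {cores:,}")
print(f"pure MPI channels:   {pure_mpi:,}")
print(f"hybrid MPI channels: {hybrid:,}")
print(f"reduction: {pure_mpi / hybrid:.0f}x")
```

The reduction comes out to roughly 576, about 24 squared, because the number of pairs grows with the square of the number of communicating processes.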

The future of CMB research will demand many more creative approaches. New high-performance machines will have novel architectures. The CMB community also foresees a time when all research groups will collaborate, integrating data from a range of experiments involving about 250,000 detectors compared to Planck’s 72.

That massive collaboration also will include ground-based telescopes’ data on the polarization of CMB light. With measurements of both types of CMB polarization, known as E-modes and B-modes, cosmologists can cross-check the temperature data studied so far.

“We are taking three things that are all theoretical and combining them in one observation that, when it’s made, will be the third Nobel Prize for CMB physics,” Borrill says.

About the Author

Andy Boyles is a senior science writer at the Krell Institute and contributing science editor at Highlights for Children Inc.