Universe in a day

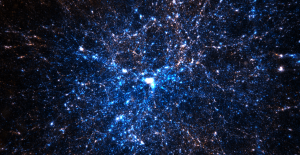

A small subset of the particles from a Dark Sky Simulations Collaboration run, centered on a massive galaxy cluster. Particles are colored by whether they are moving toward (blue) or away from (red) the cluster.

One day this past April, a team using 200,000 processors on Oak Ridge National Laboratory’s Titan supercomputer set out to simulate the expanding universe. The researchers began with how the universe looked 13.7 billion years ago and applied gravity.

By the next day, the simulated universe had evolved to its present-day structure. Ten weeks later, the team shared its work on its website and posted it online through the preprint repository arXiv.

“The unique thing we were able to do is to take these state-of-the-art simulations and make the data nearly immediately available to the public for feedback and for future scientific work,” says team member Sam Skillman, a postdoctoral researcher with the Kavli Institute for Particle Astrophysics and Cosmology (KIPAC), a joint institute of Stanford University and SLAC National Accelerator Laboratory.

Skillman and KIPAC were part of the Dark Sky Simulations Collaboration, also known as DarkSky, which received 80 million computing hours on Titan in 2014 as part of the DOE’s INCITE (Innovative and Novel Computational Impact on Theory and Experiment) program.

“One of our big goals was to reduce the time between running the simulations and releasing the data for people outside of our direct collaboration to use in their research,” Skillman says.

Skillman’s cosmological path began in the summer of 2005, when he was a DOE undergraduate intern at Los Alamos National Laboratory. LANL’s Michael Warren, also a DarkSky team member, had developed a code to simulate Deep Impact, a NASA mission that smashed a spacecraft into a comet and analyzed the debris to decipher the comet’s composition. That code, since updated, enabled the universal feat in April: the first trillion-particle simulation of the cosmos.

Skillman, who received his Ph.D. from the University of Colorado in May 2013, studies the formation and evolution of galaxy clusters, the largest gravitationally bound objects in the universe. He credits his skill in computational simulation to the DOE Computational Science Graduate Fellowship (DOE CSGF) program.

“I was eager to get in because I’d heard that it was about the best opportunity around to do self-directed research rather than be tied to a specific program,” he says. “It’s designed to take people in various scientific and engineering fields and expose them to the computational aspects of their field.”

Skillman was also drawn to another DOE CSGF feature: a three-month practicum at one of the national laboratories, during which fellows work on something different from their graduate research. At Oak Ridge, he worked with scientist Wayne Joubert on a stencil code for graphics processing units (GPUs). Titan, a Cray XK7, incorporates GPUs, which accelerate computation while using less power per calculation than standard processors.

“The cool thing is that GPUs were originally built for gaming, and they’re what enable high-resolution graphics on your TV, computer and phone,” Skillman says. “Then about 10 years ago people started using them for scientific computation itself, for everything from the motions of molecules to the gravitational interaction between galaxies.”

Skillman says the DOE CSGF allowed him to use and develop new tools to create galaxy cluster simulations. He applied those tools to handling simulation output from DarkSky, where the data volume can be immense.

“Even just a single snapshot of the simulation data is composed of about 34 terabytes of data,” he says. By comparison, a typical desktop hard drive today holds about one terabyte. “I wrote a lot of software that is then able to access the data efficiently, whether it’s on your local machine or on the Internet.” For example, he says, “if you wanted to look at a selection of galaxy clusters from our simulation, you would enter their coordinates and you would get back all the dark matter particles associated with those clusters.”
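To make the kind of query Skillman describes a little more concrete, here is a minimal Python sketch. It is not the DarkSky collaboration's software: the function name `particles_near`, the fixed selection radius and the periodic box size are illustrative assumptions. Given a list of cluster centers, it returns the indices of the particles lying within a set distance of each center, measuring distances across the edges of the simulation volume as if the box wraps around (an assumption common in cosmological simulations, not a detail stated in this article).

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def particles_near(centers: Sequence[Point], radius: float,
                   positions: Sequence[Point], box_size: float) -> List[List[int]]:
    """Hypothetical helper: for each cluster center, list the indices of
    particles within `radius` of it.

    Assumes a periodic cubic box of side `box_size`, so separations are
    measured with the minimum-image convention.
    """
    def periodic_dist(a: Point, b: Point) -> float:
        total = 0.0
        for ai, bi in zip(a, b):
            d = abs(ai - bi) % box_size
            d = min(d, box_size - d)  # take the shorter way around the box
            total += d * d
        return math.sqrt(total)

    # Brute-force scan of every particle; real tools over terabytes of data
    # would use spatial indexing instead of checking each particle.
    return [[i for i, p in enumerate(positions) if periodic_dist(c, p) <= radius]
            for c in centers]


if __name__ == "__main__":
    positions = [(1.0, 1.0, 1.0), (99.5, 1.0, 1.0), (50.0, 50.0, 50.0)]
    print(particles_near([(0.5, 1.0, 1.0)], 1.0, positions, 100.0))  # → [[0, 1]]
```

In this toy example the second particle is selected even though its raw coordinate is far away, because in a wrapping box it sits just 1 unit from the center across the boundary.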

The data are available even to amateur cosmologists through the coLaboratory Chrome App, thanks to the combined efforts of DarkSky collaborator Matthew Turk at the University of Illinois’ National Center for Supercomputing Applications, Google and Project Jupyter.

The new simulations will greatly benefit two projects seeking to map the real universe: the Dark Energy Survey and the Large Synoptic Survey Telescope.

“We can use these cosmological simulations to inform the types, shapes, distributions and sizes of galaxies we might observe with a real telescope,” Skillman says. “This big data provides our best understanding of how these structures have formed over the past 13 billion years. By comparing it with real observations, we can both test our understanding of the physics that are important in our simulations as well as understand why certain observational aspects of real galaxies are the way that they are.”

About the Author

Tony Fitzpatrick writes about a wide variety of topics in science, technology and the environmental and agricultural sciences. His stories, articles and essays have appeared in newspapers and magazines nationwide. He is author of Signals from the Heartland.