Balancing act

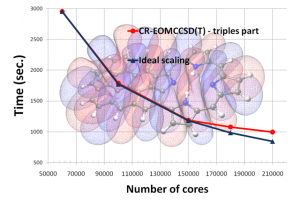

Scalability (red line) of the triples part of the re-normalized EOMCCSD(T) approach in excited-state calculations for a porphyrin-coronene complex. Timings were determined from calculations on the Jaguar Cray XT5 computer system.

The cost of failure can be steep, nowhere more so than in high-performance computing. Broken node electronics, software bugs, insufficient hardware resources, communication faults – they are the bane of the computational scientist. They also are inevitable.

Addressing fault tolerance and efficient use of distributed computing has become urgent as the number of computing nodes in the largest systems has increased. Using a technique called selective recovery, Sriram Krishnamoorthy of Pacific Northwest National Laboratory and his computational science colleagues have demonstrated that petascale computing – and eventually exascale – will depend on a dramatic shift in fault tolerance.

“The basic idea of dynamic load balancing is you can react to things like faults online,” Krishnamoorthy says. “When a fault happens, we showed you could actually find what went bad due to the fault, recover that portion and only that portion and then re-execute it” while everything else continues to execute.

Typically, when high-performance computing systems fail, the process rolls back to the last checkpoint, a record of the calculation’s state, then re-executes the failed task across all nodes. That method works well, but consumes a lot of time and space on the system.

“When one process goes bad and you take a million of them back to the last good checkpoint, it’s costly,” he says. “We showed that the cost of a failure is not proportional to the scale at which it runs.”

With the new method, described last year at the International Supercomputing meeting, only the faulty process is rerun after a failure, while the rest of the computation continues without interruption. The method relies on a system of checks that ignores duplications and synchronizes results in a given task. It also keeps tabs on the overall job through data structures that are globally accessible, rather than stored in local memory, thereby reducing communication.
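The contrast with a full rollback can be sketched in a few lines of Python. This is a toy illustration, not the PNNL implementation. The function name `run_with_selective_recovery`, the round-robin task assignment and the simulated failure are all assumptions made for the example. Results are stored in one shared table keyed by task, so a duplicate completion would be ignored, and only the tasks owned by the failed worker are redone.

```python
from typing import Callable, Dict, List, Set, Tuple


def run_with_selective_recovery(
    tasks: List[int],
    work: Callable[[int], int],
    n_workers: int,
    failed_worker: int,
) -> Tuple[Dict[int, int], int]:
    """Run every task once, then redo only the tasks lost with one worker.

    Hypothetical helper: returns the final results and the number of
    tasks that had to be re-executed. Requires n_workers >= 2.
    """
    # Shared bookkeeping, standing in for globally accessible data structures.
    results: Dict[int, int] = {}
    owner: Dict[int, int] = {}

    # Round-robin assignment stands in for a real dynamic load balancer.
    for i, task in enumerate(tasks):
        results[task] = work(task)
        owner[task] = i % n_workers

    # Simulate the fault: everything the failed worker produced is lost.
    lost: Set[int] = {t for t, w in owner.items() if w == failed_worker}
    for task in lost:
        del results[task]

    # Selective recovery: surviving workers re-execute only the lost tasks.
    survivors = [w for w in range(n_workers) if w != failed_worker]
    redone = 0
    for j, task in enumerate(sorted(lost)):
        if task in results:  # already recovered; ignore the duplicate
            continue
        results[task] = work(task)
        owner[task] = survivors[j % len(survivors)]
        redone += 1
    return results, redone


if __name__ == "__main__":
    results, redone = run_with_selective_recovery(
        list(range(1000)), lambda t: t * t, n_workers=10, failed_worker=3
    )
    print(f"re-executed {redone} of {len(results)} tasks")
```

In this toy run, one failed worker out of 10 forces 100 of the 1,000 tasks to be repeated. Rolling back to a checkpoint taken at the start would repeat all 1,000.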

Krishnamoorthy began his work improving the efficiency of algorithms as a graduate student at Ohio State University. When he joined PNNL in 2008, he found a collaborator in Karol Kowalski, a computational chemist working to model the behavior of organic molecules that can shift their electronic state to harvest energy from light. Later the molecules can release that energy to generate electricity. Currently, solar panels use inorganic arrays to perform the same function, but organic photovoltaics may be more flexible if they can be made efficiently.

To guide a would-be builder to an organic molecular system that efficiently transforms solar energy into electricity, Kowalski models the behavior of electrons within these molecules at various excited states. But the computational power required to model even a simple system with a few electrons quickly becomes prohibitive when using standard methods.

“The problem is not just the increasing numbers of atoms but also the numerical cost of these calculations,” Krishnamoorthy says. “As you double the number of electrons, you have more than a hundred times more work to do. The question becomes how do you run these calculations as efficiently as possible, so that given a particular machine and time, what is the largest (molecular) system you can run?”
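The article does not give the exponent behind that figure, but a rough check is possible. Assume the cost grows as the seventh power of system size $N$, the textbook scaling for the triples step in coupled-cluster methods of the CCSD(T) type. Doubling $N$ then multiplies the work by

$$
\frac{\mathrm{cost}(2N)}{\mathrm{cost}(N)} = \frac{(2N)^{7}}{N^{7}} = 2^{7} = 128,
$$

which is consistent with "more than a hundred times more work."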

Krishnamoorthy quickly set to work determining how to make Kowalski’s models more efficient and how to run the algorithms on much larger parallel systems.

“Karol wanted to work on excited states and equation-of-motion coupled-cluster methods,” Krishnamoorthy says. “These are electronic structure calculations that we are solving with varying degrees of approximation. First, you solve the time-independent solution to the electronic structure for a base structure in the ground state. Then with equations of motion you are studying a variety of excited states. My role was essentially to see what it takes to solve such a problem on the latest machine of interest.”