Getting a grip on the grid

There are two parts to each system analysis, Allemong says:

- State estimation, which takes measurements of electrical quantities on the system and, using the known structure of the network, calculates what the measured quantities should be. The calculated values are then compared against the measured values, and the end result is an estimated grid state based on those measurements.

- Contingency analysis, which computes the power grid’s condition if there are topological changes – particularly if transmission lines, transformers or generators go out of service. “That will cause the flows to all redistribute, so you’d better know whether any of those conditions can cause violations” of line and transformer thermal limits and voltage limits, Allemong says.
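State estimation of this kind is conventionally posed as a weighted least-squares fit: find the state that best explains a redundant set of meter readings, then compare calculated and measured values. The sketch below is only an illustration under simplifying assumptions of our own. It uses the linearized "DC" network model, in which the flow on a line is its susceptance times the difference in voltage angles at its two ends, and a hypothetical three-bus network with made-up readings. Real estimators work with the full nonlinear AC equations, and the function names here are illustrative.

```python
# Minimal DC weighted-least-squares state estimator (illustrative sketch only).


def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def estimate_state(h, z, w):
    """Weighted least squares: minimize sum_k w[k] * (z[k] - (H x)[k])**2.

    h: measurement matrix (one row per meter), z: measured values,
    w: weights (larger means a more trusted meter).
    Returns (estimated state, calculated measurements, residuals).
    """
    n, m = len(h[0]), len(z)
    # Normal equations: (H^T W H) x = H^T W z
    gain = [[sum(w[k] * h[k][i] * h[k][j] for k in range(m)) for j in range(n)]
            for i in range(n)]
    rhs = [sum(w[k] * h[k][i] * z[k] for k in range(m)) for i in range(n)]
    x = solve(gain, rhs)
    # Compare what the estimated state implies with what the meters reported.
    calculated = [sum(h[k][j] * x[j] for j in range(n)) for k in range(m)]
    residuals = [zk - ck for zk, ck in zip(z, calculated)]
    return x, calculated, residuals


if __name__ == "__main__":
    # Hypothetical 3-bus network: bus 1 is the angle reference (0 rad), every
    # line has susceptance 10 per unit, and flow i->j = 10 * (theta_i - theta_j).
    # Unknowns: x = [theta_2, theta_3].
    H = [[-10.0, 0.0],    # flow 1->2 = 10 * (0 - theta_2)
         [0.0, -10.0],    # flow 1->3 = 10 * (0 - theta_3)
         [10.0, -10.0]]   # flow 2->3 = 10 * (theta_2 - theta_3)
    z = [0.51, 0.79, 0.30]  # made-up noisy flow readings (per unit)
    w = [1.0, 1.0, 1.0]     # equal trust in every meter
    angles, calc, res = estimate_state(H, z, w)
    print("estimated angles:", angles)
    print("residuals:", res)
```

Because three flows are measured but only two angles are unknown, the readings are redundant, and the small residuals show how far each meter disagrees with the best-fit state.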

“In order to do the contingency calculations you have to know what the state of the network is in both topology and flows. State estimation does that,” Allemong says. “Once you have that model of flows and voltages, you compute a bunch of these outage or topology changes and what the resultant conditions would be.”
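The contingency step can be sketched as a single-outage (N-1) screen: take one line out at a time, recompute the flows, and flag any line pushed past its thermal limit. This is a minimal sketch under assumptions of our own: it uses a DC power-flow approximation, treats bus 0 as the angle reference, assumes the bus injections sum to zero, checks only line thermal limits (not voltage limits), and runs on a hypothetical three-bus network. The names and numbers are illustrative, not taken from the article.

```python
from collections import deque


def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def is_connected(n_bus, lines):
    """Breadth-first search from bus 0; False means an outage has islanded part of the grid."""
    adj = {i: [] for i in range(n_bus)}
    for f, t, _, _ in lines:
        adj[f].append(t)
        adj[t].append(f)
    seen, queue = {0}, deque([0])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in seen:
                seen.add(v)
                queue.append(v)
    return len(seen) == n_bus


def dc_flows(n_bus, lines, injections):
    """DC power flow: solve B' theta = P with bus 0 as the angle reference."""
    size = n_bus - 1
    b = [[0.0] * size for _ in range(size)]
    for f, t, sus, _ in lines:
        for i, j in ((f, t), (t, f)):
            if i > 0:                      # bus 0 is dropped from the system
                b[i - 1][i - 1] += sus
                if j > 0:
                    b[i - 1][j - 1] -= sus
    theta = [0.0] + solve(b, injections[1:])
    return [sus * (theta[f] - theta[t]) for f, t, sus, _ in lines]


def n_minus_1(n_bus, lines, injections):
    """Map each outaged line's index to the problems found with it out of service."""
    report = {}
    for k in range(len(lines)):
        remaining = lines[:k] + lines[k + 1:]
        if not is_connected(n_bus, remaining):
            report[k] = ["islanded"]
            continue
        flows = dc_flows(n_bus, remaining, injections)
        # Flows redistribute; record every remaining line now over its limit.
        overloads = [(f, t) for (f, t, _, limit), p in zip(remaining, flows)
                     if abs(p) > limit]
        if overloads:
            report[k] = overloads
    return report


if __name__ == "__main__":
    # Hypothetical network: (from bus, to bus, susceptance, thermal limit), per unit.
    lines = [(0, 1, 10.0, 0.8), (0, 2, 10.0, 0.8), (1, 2, 10.0, 0.5)]
    injections = [1.0, -0.6, -0.4]  # generation at bus 0, loads at buses 1 and 2
    print("base-case flows:", dc_flows(3, lines, injections))
    for k, problems in n_minus_1(3, lines, injections).items():
        print("outage of line", lines[k][:2], "->", problems)
```

In this toy case the base flows are all within limits, but losing either line out of bus 0 forces the whole 1.0 per-unit injection through the other path and overloads it, which is exactly the kind of violation the screen is meant to catch.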

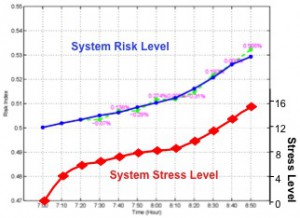

Risk levels of the western North America power grid over a morning load pick-up period. At the beginning of the period, when the system’s total power consumption is at a low level, increasing load doesn’t increase risk levels as much as when the total load is at a higher level, toward the end of the period.

The standard analysis for today’s grid operators is a single contingency – cases involving the failure of just one component. In power grid terms, that’s an “N-1” contingency case. “N” represents the total number of elements in the system and 1 is the number of elements that fail.

“Usually, for power grid operation if you lose one element, the system should be able to maintain stability,” Huang says. But as in the 2003 outage, blackouts can be caused by multiple failures – an “N-x” contingency case.

Insurmountable problems

That makes the problem huge. For example, there are about 20,000 components in the Western Electricity Coordinating Council (WECC) system serving nearly 1.8 million square miles in the western United States and Canada. N-1 contingency analysis, therefore, requires considering 20,000 cases.

Analyzing the impact of any combination of just two WECC components (N-2 contingency analysis) failing means considering a vastly larger number of cases – roughly 10⁸, or 100 million, cases, Huang and his colleagues, Yousu Chen and the late Jarek Nieplocha, wrote in a paper for the July 2009 meeting of the IEEE Power and Energy Society.

Analyzing possible combinations of three or more components (N-x cases) makes the problem so large that even the most powerful high-performance computers couldn’t solve it quickly enough to provide useful results.
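The case counts above are binomial coefficients: choosing k failed elements out of N gives C(N, k) distinct cases, which for small k grows roughly like N^k / k!. A quick check using the article's approximate figure of 20,000 WECC components (the helper name is ours, not from the paper):

```python
from math import comb


def contingency_cases(n_elements, k_failed):
    """Number of distinct N-k outage cases: ways to choose k failed elements out of N."""
    return comb(n_elements, k_failed)


N = 20_000  # approximate WECC component count cited in the article

for k in (1, 2, 3):
    print(f"N-{k}: {contingency_cases(N, k):,} cases")
# N-1: 20,000 cases
# N-2: 199,990,000 cases
# N-3: 1,333,133,340,000 cases
```

N-2 comes out near 2 × 10⁸, the same order of magnitude as the paper's estimate, and N-3 already exceeds 10¹² cases.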

But not all grid components are created equal – a fact that lets Huang and his fellow researchers make the problem more manageable. Their contingency analysis algorithm treats the grid as a weighted graph and applies the concept of “graph betweenness centrality” to identify the most traveled paths between locations. Then it analyzes cases involving only the most critical transmission lines and components.

Components identified as having little impact on grid stability are removed from the analysis, making the problem tractable with available computing power.
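Betweenness centrality scores an edge by how many shortest paths between pairs of nodes run through it, so heavily traveled lines score high. The sketch below uses the textbook Brandes algorithm, with Dijkstra's algorithm for weighted shortest paths, to score every line and keep the top-ranked ones. It illustrates the general technique and is not the PNNL implementation: treating edge weights as an electrical distance (for example, line impedance), the toy five-bus graph, and the function names are all assumptions. Weights must be positive, and ties between path lengths are detected by exact comparison, so the example uses integer weights.

```python
import heapq


def edge_betweenness(adj):
    """Brandes' algorithm for edge betweenness on an undirected weighted graph.

    adj maps node -> {neighbour: positive weight}.
    Returns {frozenset({u, v}): number of shortest paths (split over ties) using u-v}.
    """
    score = {frozenset((u, v)): 0.0 for u in adj for v in adj[u]}
    for s in adj:
        # Dijkstra from s, counting shortest paths (sigma) and recording predecessors.
        dist, sigma, preds = {s: 0}, {s: 1.0}, {s: []}
        order, done, heap = [], set(), [(0, s)]
        while heap:
            d, u = heapq.heappop(heap)
            if u in done:
                continue
            done.add(u)
            order.append(u)
            for v, w in adj[u].items():
                nd = d + w
                if v not in dist or nd < dist[v]:
                    dist[v], sigma[v], preds[v] = nd, sigma[u], [u]
                    heapq.heappush(heap, (nd, v))
                elif nd == dist[v]:
                    sigma[v] += sigma[u]
                    preds[v].append(u)
        # Accumulate dependencies from the farthest nodes back toward s.
        delta = {v: 0.0 for v in order}
        for t in reversed(order):
            for v in preds[t]:
                c = sigma[v] / sigma[t] * (1.0 + delta[t])
                score[frozenset((v, t))] += c
                delta[v] += c
    # Every unordered pair of endpoints was counted once from each end.
    return {e: val / 2.0 for e, val in score.items()}


def critical_lines(adj, k):
    """Return the k highest-scoring lines as (edge, score) pairs."""
    ranked = sorted(edge_betweenness(adj).items(), key=lambda item: item[1], reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    # Hypothetical 5-bus grid: a triangle A-B-C tied to D and E by single lines.
    edges = [("A", "B", 1), ("B", "C", 1), ("A", "C", 1), ("C", "D", 1), ("D", "E", 1)]
    adj = {}
    for u, v, w in edges:
        adj.setdefault(u, {})[v] = w
        adj.setdefault(v, {})[u] = w
    for line, s in critical_lines(adj, 2):
        print(sorted(line), s)
    # expected: ['C', 'D'] 6.0, then ['D', 'E'] 4.0
```

The C-D line scores highest because every path between the triangle and buses D and E must cross it; lines such as A-B carry few shortest paths and would be the first candidates to drop from the contingency list.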

The researchers’ method breaks contingency analysis into two steps: selection and analysis. That makes it well suited to run on PNNL’s Cray XMT, a computer based on “massively multithreaded” architecture. See sidebar.
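The two-step structure can be sketched as a small pipeline: a cheap selection stage ranks components and keeps the top k, and an analysis stage evaluates the surviving N-2 cases. Each case is independent of the others, which is what makes the second stage easy to spread across many threads. Everything here is hypothetical: the importance scores, the cutoff k, and the `toy_severity` stand-in for a real power-flow check.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import combinations


def select(importance, k):
    """Stage 1 (selection): keep the k components with the highest importance scores."""
    return sorted(importance, key=importance.get, reverse=True)[:k]


def analyze(components, evaluate, workers=4):
    """Stage 2 (analysis): evaluate every N-2 pair among the selected components.

    The cases do not depend on one another, so they can be handed to a pool of
    workers and evaluated concurrently.
    """
    cases = list(combinations(components, 2))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(evaluate, cases))
    return dict(zip(cases, results))


if __name__ == "__main__":
    # Made-up importance scores (e.g. betweenness) for six hypothetical lines.
    importance = {"L1": 9.0, "L2": 1.0, "L3": 7.5, "L4": 0.5, "L5": 6.0, "L6": 2.0}
    selected = select(importance, 3)  # ['L1', 'L3', 'L5']

    def toy_severity(pair):
        # Hypothetical stand-in for a real power-flow check of a double outage.
        return importance[pair[0]] + importance[pair[1]]

    report = analyze(selected, toy_severity)
    total = len(list(combinations(importance, 2)))
    print(f"{len(report)} N-2 cases analyzed instead of {total}")
```

With six components and k = 3, the analysis stage handles C(3, 2) = 3 cases instead of C(6, 2) = 15. In CPython, threads do not speed up CPU-bound pure-Python work because of the global interpreter lock; a `ProcessPoolExecutor` with a module-level evaluation function would be the usual substitute, and the thread pool is used here only to show the structure.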

It’s clear grid operators must quickly analyze multiple contingencies and take action to block problems – and that the job will take considerable computing power, even when graph analysis discards less damaging scenarios.

Allemong says grid management organizations typically analyze contingencies every few minutes, but most results are inconsequential. Efforts to get at only significant cases have had limited success, but with the weighted graph approach, “maybe Henry is on to something.”

About the Author

The author is a former Krell Institute science writer.