Learning climate

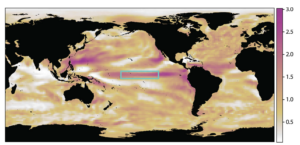

Regions of importance, as learned by an AI model, for predicting wintertime Niño3.4 sea surface temperature (SST) anomalies – indicators of central tropical Pacific El Niño conditions – from wintertime SST anomalies a year earlier. Purple marks the regions most important for the prediction; white marks regions that contribute little to predicting the anomalies a year in advance. The teal box outlines the Niño3.4 region, the target of the machine-learning task. (Map: Jamin Rader.)

Weather so fascinated Jamin Rader in his high school years that he would compile forecasts for analysis. He went to websites – weather.com, AccuWeather, the National Weather Service and others – to collect data, then calculated the most probable outcomes.

Rader, a Colorado State University climate dynamics doctoral student, didn’t know then that he was creating an ensemble forecast. “It’s funny how much of what I did as a kid is actually, in a lot of ways, what I do now.”

It wasn’t until his parents pointed it out, however, that Rader realized his obsession could become his career. Now the Department of Energy Computational Science Graduate Fellowship (DOE CSGF) recipient applies machine learning to atmospheric science and climate, probing predictability in forecasts extending months, years and beyond.

Machine-learning techniques are relatively new to his field, Rader says, so he can “explore their capabilities that we don’t fully understand yet and, maybe even more so, explore their limitations.” One aspect of Rader’s research is encapsulated in the difference between two phrases: “this looks like that” and “this looks like that there.” Both relate to interpretable neural networks, which seek to resolve a major machine-learning problem: how these models arrive at their answers is largely a mystery.

In simple terms, researchers feed neural networks and other machine-learning models known information – in this case, weather maps and data. This trains them to identify similar features in unlabeled, previously unseen data and then make predictions from them. The approach is powerful, but “the how-and-why the model makes its predictions is inscrutable,” says a July 2022 Journal of Advances in Modeling Earth Systems paper Rader coauthored with his advisor, Elizabeth Barnes, and two others. Models are rarely perfect, Barnes says, so scientists need reasons to trust their results. Interpretable models supply those reasons by revealing how the algorithms reached their predictions. “Purely understanding the approach can be incredibly helpful for gauging trust” in a model’s output.

Machine-learning models can also be overfit – learning to rely on meaningless noise that doesn’t reflect the real physical world. To make a network interpretable, researchers usually constrain the model, forcing it to learn in specific ways, Barnes says. “You’ve tied its hands, if you will, a bit.” Those constraints, however, often produce better results because the model is more likely to learn real physical relationships rather than spurious ones.

Rader says the interpretable machine-learning approach “comes from the idea that we can make neural networks that think a little bit like us,” helping scientists understand more complex aspects of climate and climate predictability.

For the paper, the team extended the prototypical part network (ProtoPNet) that Duke University’s Chaofan Chen and colleagues described in a 2019 publication. ProtoPNet’s approach, developed for bird classification, is “this looks like that.” Much as a human ornithologist would, the technique identifies the species of previously unseen birds by comparing images of them with prototypes – characteristic parts of images of known species drawn from the training data.

The advantage: ProtoPNet’s decisions can be linked to features found both in the input bird image and in a relatively small set of species-specific training prototypes, the Journal of Advances in Modeling Earth Systems paper says. That link shows how the algorithm made its prediction.

Barnes, Rader and their colleagues added location to ProtoPNet, extending it to “this looks like that there” and naming it ProtoLNet, for prototypical location network. For weather and climate forecasting, a neural network must do more than identify similar features in data, Rader says. The features also must be in similar locations. Greater convection and rainfall over the western Indian Ocean, for example, may herald a cyclical rainfall pattern. In another place, such as over land or another ocean, the same features may indicate something different.
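To make the difference between the two phrases concrete, here is a minimal Python sketch, not the team’s code. It assumes a network has already turned a map into a grid of feature vectors and that the prototype vectors are given. Following ProtoPNet’s published design, each prototype is compared with every grid cell and the best match anywhere counts (“this looks like that”). The hypothetical `location_masks` argument, a per-prototype weight for each grid cell, is one simple way to also ask where the match occurred (“...there”); here the mask is set by hand, whereas a trained model would learn such weights.

```python
import numpy as np


def similarity(dist_sq, eps=1e-4):
    # ProtoPNet-style log activation: large when the squared distance is small
    return np.log((dist_sq + 1.0) / (dist_sq + eps))


def prototype_scores(features, prototypes, location_masks=None):
    """Score how strongly each prototype appears in a feature map.

    features:       (H, W, C) grid of feature vectors (assumed precomputed)
    prototypes:     (P, C) prototype vectors
    location_masks: optional (P, H, W) weights; hypothetical "there" term
    """
    # Squared L2 distance between every grid cell and every prototype
    diff = features[None, :, :, :] - prototypes[:, None, None, :]  # (P, H, W, C)
    dist_sq = np.sum(diff ** 2, axis=-1)                            # (P, H, W)
    sim = similarity(dist_sq)
    if location_masks is not None:
        # Down-weight matches that occur in the wrong place
        sim = sim * location_masks
    # Keep the best (possibly location-weighted) match on the map
    return sim.reshape(sim.shape[0], -1).max(axis=1)


features = np.zeros((2, 2, 1))
features[0, 0, 0] = 1.0            # the pattern appears in the top-left cell
prototypes = np.array([[1.0]])     # one hypothetical prototype
mask = np.zeros((1, 2, 2))
mask[0, 1, 1] = 1.0                # this prototype counts only in the bottom-right cell

print(prototype_scores(features, prototypes))        # strong: "this looks like that"
print(prototype_scores(features, prototypes, mask))  # weak: right pattern, wrong place
```

The first call finds a strong match because the pattern appears somewhere on the map; the second returns a much weaker score because the pattern sits outside the cell where this prototype is allowed to count.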

With ProtoLNet, researchers can see the set of past patterns the network used to produce a forecast. The team believes the approach is useful for science, but “we also hope it will inspire other scientists to improve upon these methods.”

Now Rader is devising an interpretable neural network from scratch, Barnes says, exploring how to “develop other tools that are applicable to different types of climate problems that the ‘this looks like that there’ algorithm is not right for.”

Life has forced Rader to innovate. He was 18, a high school cross-country runner, when he felt ill on a Sunday night and went to bed. He rose when he realized something was wrong. What happened next, he says, was “almost cinematic, like ‘steps out of bed, the next step isn’t quite as strong, the third step is, oh man, I need to lay down on the ground.’”

Rader had transverse myelitis. Doctors believe his immune system attacked his spine, damaging its nerve fibers and paralyzing his lower body.

Going from able-bodied to paraplegic in minutes was difficult, but Rader says he benefited from the chance to improve dramatically. It was “a huge challenge where I get to get really, really good at something.” Each day he could “experience being better, even if that started off as just being, ‘now I can sit up.’”

Rader spent the summer after his freshman year at the University of Washington studying weather and climate at the University of Oklahoma. He spent another three summers at the University of Colorado Boulder through SOARS (Significant Opportunities in Atmospheric Research and Science), a program for underrepresented groups. “That was when I made the transition from wanting to do weather and weather forecasting to being more interested in large-scale climate problems.”

During a virtual 2021 practicum at Pacific Northwest National Laboratory, Rader worked with earth scientist Po-Lun Ma to improve how autoconversion – cloud droplets colliding to form rain – is represented in DOE’s Energy Exascale Earth System Model (E3SM).

Autoconversion, and how aerosols – tiny airborne particles – affect it, are sources of uncertainty in Earth system models, says Ma, who oversees a multi-institutional effort studying aerosols and their interactions with clouds. Current models rely on a 20-year-old approach based on data from a single cloud type, and studies have found that this approach does not describe autoconversion well under other conditions.

Rader used cloud measurements from DOE and other agencies to calculate autoconversion rates under various circumstances. He identified important factors affecting autoconversion and built a prototype machine-learning emulator that simulates it accurately at low computational cost.

Lab researchers will evaluate the approach’s performance, including within E3SM, DOE’s flagship Earth system model, to determine whether it improves on the standard method. “Then this can be part of the next-generation E3SM,” Ma says.

Rader expects to graduate in 2024. He’d like to continue working with machine learning, but he avoids making plans because they rarely develop as expected. Instead, “if you just ride the wave, you’ll get to the right spot.”

Alongside research, Rader visits elementary schools a few times each year to talk about weather with students. His presence shows “you don’t have to be a typical white-lab-coat scientist we see in old books. You can be a young disabled scientist.”

Barnes marvels at Rader’s energy, enthusiasm and quick grasp of difficult concepts. “He’s undaunted by technical skills that he may not have yet,” eagerly learning new approaches. “That’s something that’s so fun with him.”

Rader spends much of his off-research time playing and traveling with a sled hockey team. “It’s the sport that most emulates the feeling of running,” he says. When racing across the ice, “it’s the closest thing to freedom that you can get.”

About the Author

The author is a former Krell Institute science writer.
