A split nanosecond

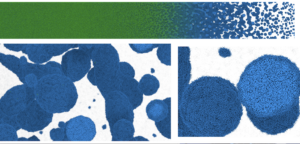

Top: In a process that lasts about half a nanosecond, supercritical particle size is rendered in proportion to density (green is supercritical-fluid vapor, blue supercritical liquid), removing the vapor phase and leaving only liquid droplets (bottom). (Visualization: Sandia National Laboratories.)

Imagine a computer simulation of molten aluminum at a scorching temperature, portraying nearly 1.5 billion atoms. The model consumes multiple days, running on nearly 8,200 graphics processing units (GPUs) on the National Nuclear Security Administration’s Sierra supercomputer at Lawrence Livermore National Laboratory in California. And yet the whole shebang captures atomic behavior that spans but half a nanosecond of real-world physics.

You have entered the world of large-scale atomistic simulations – of Stan Moore, Mitchell Wood and Aidan Thompson at Sandia National Laboratories in New Mexico. Here, strange things happen at extreme temperatures, when fluids become so hot that the distinction between liquid and vapor disappears, a condition known as supercritical.

Simulating such short time and length scales helps scientists grasp how supercritical fluids cool to form and grow liquid droplets and vapor bubbles. The Sandia team uses a code called LAMMPS (large-scale atomic/molecular massively parallel simulator) for such work.

The project is supported by the NNSA’s Advanced Simulation and Computing (ASC) program, which helps to ensure the safety and reliability of the nation’s nuclear weapons stockpile. ASC’s Large Scale Calculation Initiative, which partially supported the effort, emphasizes the efficient use of supercomputers for massive computations.

In recent decades, molecular dynamics (MD) simulators like LAMMPS have become widely used in fields ranging from physics and chemistry to biology and materials science. MD codes help researchers address questions about phenomena that occur under extreme conditions, including the processes behind nuclear fusion, planet formation and material design and manufacture.

“We’re trying to apply our simulation efforts where they make the best alignments to experiments, but also give us insight where the experiments can’t,” Wood says.

Even the unique experiments conducted at Sandia’s Z Pulsed Power Facility sometimes need help. The Z machine produces conditions of high temperature and pressure found nowhere else on Earth.

Materials in these situations typically are modeled with continuum hydrocodes, which are used to run especially demanding, highly dynamic simulations. But hydrocodes are ill-suited for capturing the liquid-vapor separation phenomena that sometimes arise in Z machine experiments.

“MD simulations can help us understand how to improve some experiments on Z and improve our scientific insight into these problems,” Wood says. “We normally can’t simulate the full experiments with molecular dynamics, so we’re trying to use a multiscale method where smaller length scales inform higher length and timescale methods.”

One of the simplest models that the team uses is called the Lennard-Jones (LJ) potential, which accounts for most of the relevant physics involved in the atomic interactions of fundamental materials. “That becomes the basis for comparison,” Wood says. “If we want to improve our physical model in MD, we always compare it back to the original LJ calculation.”
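For readers who want to see what such a baseline looks like, here is a minimal sketch of the standard textbook Lennard-Jones pair potential, U(r) = 4ε[(σ/r)^12 − (σ/r)^6]. It is not the team's code or a LAMMPS input. The function names and the reduced units (ε = σ = 1) are assumptions chosen for illustration, and no aluminum-specific parameters are implied.

```python
# Illustrative sketch only: textbook Lennard-Jones pair potential in
# reduced units. Function names and default parameters are hypothetical,
# not taken from LAMMPS or from the Sandia simulations.

def lj_energy(r, epsilon=1.0, sigma=1.0):
    """Pair energy U(r) = 4*eps*((sigma/r)**12 - (sigma/r)**6)."""
    sr6 = (sigma / r) ** 6
    return 4.0 * epsilon * (sr6 * sr6 - sr6)


def lj_force(r, epsilon=1.0, sigma=1.0):
    """Radial force F(r) = -dU/dr; positive means the atoms repel."""
    sr6 = (sigma / r) ** 6
    return 24.0 * epsilon * (2.0 * sr6 * sr6 - sr6) / r


# Separation at which the energy is lowest (for sigma = 1).
R_MIN = 2.0 ** (1.0 / 6.0)

if __name__ == "__main__":
    for r in (0.9, 1.0, R_MIN, 1.5, 2.5):
        print(f"r = {r:.3f}  U = {lj_energy(r):+.4f}  F = {lj_force(r):+.4f}")
```

In this form the energy crosses zero at r = σ and reaches its minimum of −ε at r = 2^(1/6)σ, where the force vanishes. Closer than that the atoms repel strongly, and farther apart they attract weakly. Two parameters are all the model has, which is part of why it serves as a simple reference point for more elaborate potentials.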

When researchers need more accuracy than LJ can provide, they fit a machine-learning model called SNAP (spectral neighbor analysis potential), which Thompson originated, for the material of interest. The team generates its machine-learning training data from density functional theory (DFT), a quantum mechanical atomistic simulation method.

To learn to simulate material behavior correctly, the SNAP model must see thousands of examples of the underlying physics. The model for supercritical aluminum, for instance, involved approximately 800,000 configurations of DFT training data.

“One challenge at the beginning was that our machine-learning model in LAMMPS was really slow and didn’t achieve a large fraction of peak on GPUs,” Moore says. But through the Exascale Computing Project, a large collaboration of experts from industry, academia and the national labs, the team made the LAMMPS machine-learning model more than 30 times faster on NVIDIA’s V100 GPU. “That enables longer simulations, and we can achieve more physical insight that way.”

To make the most of their time on Sierra, the researchers designed their simulations to yield valuable information in three or fewer runs.

“We run on a large fraction of these machines for a few days and that’s all the time we get,” Wood says. “Setting up the one simulation that gives us the biggest physical insight is the challenge.”

The team also studies materials besides aluminum. A billion-atom carbon simulation was a finalist for the Association for Computing Machinery’s 2021 Gordon Bell Prize for outstanding achievement in high-performance computing. That project used more than 27,000 GPUs for 24 hours on the full Summit supercomputer at the Department of Energy’s Oak Ridge Leadership Computing Facility, simulating one nanosecond of physical time. Nine co-authors contributed to the paper describing that run, including Moore, Wood, Thompson and colleagues at the University of South Florida, NVIDIA Corporation, the National Energy Research Scientific Computing Center and Sweden’s Royal Institute of Technology.

The 2020 Gordon Bell Prize recognized a copper simulation as an advance in molecular dynamics machine learning. But 2021’s billion-atom carbon simulation ran more than 20 times faster than the 2020 prize winner.

“What I find particularly exciting about molecular dynamics is that it gives you access to information that you cannot get in other ways,” Thompson says. When scientists do experiments, they extract data about nature, “but it comes packaged in a lot of layers that obfuscate what’s happening. In reality, we just get partial information.”

And often, theory and experimental results fail to match.

Thompson notes that it’s hard to know whether the differences stem from limitations in the theories or in the experiments. MD provides a way to cut through the uncertainty, “like running an experiment on a fictitious universe that’s similar to the one we actually live in. We still have problems, of course, because that fictitious universe doesn’t exactly match up to the real one we live in. But we’re getting much better at closing that gap.”

Such approaches hold great promise, Wood says. “I view MD and also computational simulation as one of the fastest-growing avenues to do research.”

About the Author

Steve Koppes is a former science writer and editor with the University of Chicago, Arizona State University and the University of Georgia. He’s been a frequent contributor to Purdue University’s College of Agriculture and is the award-winning author of Killer Rocks From Outer Space.
