Boxing in software

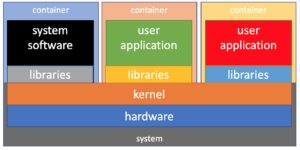

Containerized applications all have equal access to the system; only their software stacks are kept separate. (Schematic: Tim Randles, Los Alamos National Laboratory.)

A well-known saying among Silicon Valley entrepreneurs is “move fast and break things.” That approach, however, doesn’t work for those managing and using Department of Energy supercomputers, machines at the core of the nation’s science and security missions. So how can they promote user innovation while maintaining system reliability?

“Linux containers,” which isolate programs in separate computing environments, says Tim Randles, a Los Alamos National Laboratory (LANL) scientist. He’s helping lead what he calls “the container revolution in high-performance computing,” or HPC.

Linux containers adapted for HPC grew out of the competing needs of HPC users and system administrators. In 2015, Randles was in the latter role, overseeing LANL’s supercomputers while supporting user software needs. “We wanted to provide more flexibility in our HPC platforms. But only software with high demand justified the sysadmin effort for installation and maintenance.”

Randles and colleagues recognized that this approach limited experimentation and new science. Yet they had to balance this against the fact that every new piece of software loaded onto one of LANL’s HPC systems could either break user applications or require a total reboot.

“It’s painful to have to reboot an HPC system,” Randles says. It can cost anywhere from several hours to a day or more in lost computing time. “As a systems administrator I cared about the robustness and uptime of my machine and ensuring that it’s doing science, because if it’s not doing science then we’re failing our mission.”

The problem, he says: A supercomputer’s system services and applications all were coupled tightly in common software and runtime environments. In essence, once loaded, a user’s program became part of a bundled system in which, like pulling part of a spider’s web, changing one component could affect all the others.

The solution, in system administrator speak, was to use containers to divorce the user runtime from the system stack. “A Linux container uses technology that gives an application running on a system a different view of that system’s resources,” Randles says. Container technology delivers a Lego-like, plug-and-play approach to software innovation. Users can bring their own software, known as user-defined software stacks, and run it on a supercomputer. The container, meanwhile, keeps the two software environments separate, even while “users still have access to everything in the HPC system that makes it special.”

The first Linux software containers, such as those built with the popular open-source Docker platform, weren’t usable on HPC systems, he says. A core reason was security. Docker effectively gave users administrator privileges over the system kernel, the heart of the operating system and the layer between applications and hardware. “In our environment,” Randles says, “this is a no go. Our security people freak out.”

To overcome this hurdle, in 2017 Randles and LANL staff scientist Reid Priedhorsky developed Charliecloud, the lab’s open-source software for building and running unprivileged HPC containers.

“Reid’s insight was that we could use new Linux kernel features to let users bring their own software stack and deploy it on our system without ever crossing any privilege boundaries,” Randles says. Charliecloud creates fully unprivileged containers that can use the supercomputer’s hardware without administrator rights. “The kernel continues to maintain the security of the system.”

Introducing a new supercomputer user environment inevitably leads to issues, and Charliecloud was no different. “If you start messing around with how people get their job done, they get nervous,” Randles says. “However, what we’re finding through experience with Charliecloud is that users like it.” Indeed, in the past five years containers have matured from a “radical idea” for LANL’s HPC users to “how we do business on all our production systems,” says Randles, now a lab program manager.

This transition was helped in part by a 2019 paper in which Randles, Priedhorsky and LANL colleague Alfred Torrez showed that HPC containers have minimal or no impact on supercomputer performance, so users gain software flexibility with little or no loss in speed.

Moreover, Randles says, containers provide greater reliability in the multi-user environment of a national lab supercomputer. For example, when running a complex physics simulation, such as one depicting a supernova, computational scientists use a mix of their own code and code that the supercomputer center supplies, often including dozens of libraries and compilers. In a shared environment, however, libraries often are updated and changed, something that users must track.

“Users have more control with the container technology,” Randles says. “If I put libraries in the container, I’m sure that all of those libraries stay the same, so my application will keep running regardless of what the systems administrator does.”

Container technology has evolved beyond the user-system interface to become a systemwide approach to partitioning a supercomputer’s components. “In a containerized world, a software package needs to only be updated in affected containers,” Randles says, “which can then be restarted without rebooting the entire system or breaking a user’s application.”

Given the challenge of rebooting an exascale machine, this systemwide container approach has come at just the right time. “The sheer size of exascale systems makes running them difficult. Linux containers are one technology we’re adopting to make that easier,” he says.

The container revolution is spreading. All DOE labs now have container efforts, and Charliecloud, the foundation for production HPC at LANL, has spread well beyond the lab. Groups ranging from the Texas Advanced Computing Center to the University Corporation for Atmospheric Research have adapted it to improve the balance between scientific innovation and system reliability.

Looking back on a half-decade of successful container development, Randles says the focus has now shifted from the heavy lifting of introducing a new way of operating to optimizing container technologies and working with vendors to make them easier to use.

Editor’s note: Reprinted from ASCR Discovery.

About the Author

Jacob Berkowitz is a science writer and author. His latest book is The Stardust Revolution: The New Story of Our Origin in the Stars.