Labeling climate

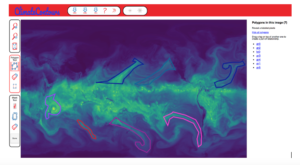

Users logging on to ClimateNet’s web-based labeling tool, ClimateContours, view computer-simulated weather images they can label with polygons to classify tropical cyclones, atmospheric rivers and other features. Researchers will feed tens of thousands of these curated images to a deep-learning algorithm that can grasp which combinations of features are most relevant in classifying about 10 distinct weather and climate patterns. Users can also create new labels for other events of interest and name them. Further, they can rate their level of confidence for all labels, which is useful information for training deep-learning models. (Image: Lawrence Berkeley National Laboratory.)

Weather is notoriously difficult to predict, but it’s not for lack of data. Satellites generate hundreds of terabytes of images daily. Add to that minute-by-minute changes in temperature, wind speed and other variables from every corner of the globe, and the information swamps traditional predictive models.

But what if the machines we use to predict weather and climate can learn what to look for? That’s the goal of ClimateNet, a project launched last year at the National Energy Research Scientific Computing Center (NERSC), a Department of Energy Office of Science user facility at Lawrence Berkeley National Laboratory (LBNL).

ClimateNet, the brainchild of project leader Karthik Kashinath and NERSC colleague Prabhat, applies the power of machine-learning and deep-learning techniques to identify important weather and climate patterns. The methods will analyze expert-labeled, community-sourced open climate data sets, such as those generated by the Community Atmosphere Model (CAM) based at the National Center for Atmospheric Research in Boulder, Colorado. “We are proposing a paradigm shift,” says Prabhat, leader of NERSC’s Data and Analytics Services Group. “Instead of relying on humans to specify the definition of a weather event, we are going to let a deep-learning system make that call.”

Modern climate simulations like CAM are broken into a million grid points, with each point represented by a dozen or more variables. All of these data are stored every three hours, every day of the year. “There is an amazing spatial and temporal fidelity in these data sets,” Prabhat says. “But because it’s almost impossible to parse using traditional methods, what people end up doing is essentially collapsing all of this information – petabytes and petabytes of data – into a few numbers so that policymakers can know by how much the mean global temperature number or the average sea level will rise over the next century.”
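To get a feel for those figures, here is a rough back-of-the-envelope sketch in Python. The grid size, variable count and three-hour interval come from the description above. The 4 bytes per value (32-bit floats) is an assumption, and so is treating "a dozen or more" as exactly 12, which makes the result a lower bound for a single run rather than a measurement of any real CAM archive.

```python
# Back-of-the-envelope size of one simulated year from a single model run.
GRID_POINTS = 1_000_000       # "a million grid points"
VARIABLES_PER_POINT = 12      # "a dozen or more variables" (lower bound)
SNAPSHOTS_PER_DAY = 24 // 3   # output stored every three hours
DAYS_PER_YEAR = 365
BYTES_PER_VALUE = 4           # ASSUMPTION: each value is a 32-bit float


def bytes_per_snapshot() -> int:
    """Bytes needed to store every variable at every grid point once."""
    return GRID_POINTS * VARIABLES_PER_POINT * BYTES_PER_VALUE


def bytes_per_year() -> int:
    """Bytes needed for all three-hourly snapshots in one simulated year."""
    return bytes_per_snapshot() * SNAPSHOTS_PER_DAY * DAYS_PER_YEAR


print(f"per snapshot:       {bytes_per_snapshot() / 1e6:.0f} MB")
print(f"per simulated year: {bytes_per_year() / 1e9:.1f} GB")
```

Under these assumptions a single snapshot is 48 MB and a simulated year is about 140 GB, so a 100-year run comes to roughly 14 TB. More variables, multiple vertical levels and repeated runs would multiply that figure.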

That’s not to say these boiled-down statistics are of no value, Prabhat adds. “But when people think about how extreme weather and its impact change, they will need to start looking at these high-fidelity spatial-temporal patterns.”

Although experts generally agree on criteria for, say, a hurricane, uncertainty rules in mid-latitude cyclones and other weather patterns. Scientists’ views of weather are also often clouded by specific geographic concerns. For example, Japanese scientists’ definition of a hurricane is based on characteristics of Pacific storms; in the United States, modelers look at Atlantic-centric factors. Not least, weather-event definitions must evolve as the climate changes, Kashinath says. “We need to have methods that are agnostic to the climate that we’re dealing with now so we can pull out these events in any future climate.”

Machine-learning and deep-learning methods can look for certain geometric characteristics in the data apart from pressure, temperature, humidity or energy. “Deep-learning algorithms use more abstract representations that work across different data sets, geographical regions and climates,” Kashinath says. “They are more robust and reliable and, importantly, much faster.”

Machine learning and deep learning work best in a so-called supervised mode, in which models are trained on reams of sample data to seek patterns that are consistent across labeled objects. “A classic example,” Kashinath explains, “is when you tag your friends on Facebook photos and the social network’s algorithm then learns to identify their faces and automatically tag them in future photos.”
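The supervised idea can be shown with a deliberately tiny Python sketch: learn from labeled examples, then label a new one. This is a nearest-centroid classifier, not a deep network and not ClimateNet's method. The two features (a normalized wind speed and a normalized pressure drop), the class names and every data point are invented purely for illustration.

```python
from math import dist


def train(samples):
    """Average the feature vectors of each label; returns label -> centroid."""
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {
        label: tuple(total / counts[label] for total in acc)
        for label, acc in sums.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest to the new example."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# MADE-UP training data: (normalized wind speed, normalized pressure drop).
LABELED = [
    ((0.9, 0.8), "tropical_cyclone"),
    ((0.8, 0.9), "tropical_cyclone"),
    ((0.1, 0.2), "background"),
    ((0.2, 0.1), "background"),
]

centroids = train(LABELED)
print(predict(centroids, (0.7, 0.75)))
print(predict(centroids, (0.05, 0.3)))
```

A deep-learning model replaces the hand-picked features and simple averaging with representations it learns from the images themselves, but the workflow is the same: labeled examples in, a labeling rule out.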

The goal of ClimateNet is to provide the sample data for this training. Kashinath, Prabhat and their team have begun enlisting human experts to examine 100 years of simulated community-model images and associated data and hand-label systems as hurricanes, atmospheric rivers and other events of interest. Kashinath estimates that 10,000 to 100,000 categorized images will create a database large enough for their deep convolutional neural network model to learn which attributes are most relevant in classifying 10 or so distinct classes of complex global weather and climate patterns.
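One way a hand-drawn polygon and its confidence rating could become training data is sketched below in Python. This is a hypothetical illustration, not ClimateContours' actual storage format. The function names, the integer class IDs, and the choice to give unlabeled cells class 0 and confidence 0.0 are all assumptions.

```python
def point_in_polygon(x, y, polygon):
    """Ray-casting test; polygon is a list of (x, y) vertices in order."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does this edge straddle the horizontal line through y?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def rasterize(polygon, class_id, confidence, width, height):
    """Turn one labeled polygon into per-cell class and confidence grids.

    ASSUMPTION: cells outside the polygon get class 0 and confidence 0.0.
    """
    labels = [[0] * width for _ in range(height)]
    weights = [[0.0] * width for _ in range(height)]
    for row in range(height):
        for col in range(width):
            # Test the center of each grid cell against the polygon.
            if point_in_polygon(col + 0.5, row + 0.5, polygon):
                labels[row][col] = class_id
                weights[row][col] = confidence
    return labels, weights


# Hypothetical label: a square drawn on a 4x4 image, class 2, confidence 0.8.
square = [(1, 1), (3, 1), (3, 3), (1, 3)]
labels, weights = rasterize(square, class_id=2, confidence=0.8, width=4, height=4)
for row in labels:
    print(row)
```

The confidence grid could then be used to weight each cell's contribution during training, so that uncertain labels count for less than confident ones.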

“Unlike some supervised machine-learning tasks such as computer vision, you can’t take random people off the street, show them an image and have them do it,” Prabhat says. “With climate data, you need to look at an image but also consider other fields such as temperature, humidity, pressure and so forth. It’s not a simple task.”

To generate the curated images, the ClimateNet team has modified a Massachusetts Institute of Technology-developed tool called LabelMe and created a web interface called ClimateContours. Undergraduates who’ve taken courses in atmospheric science, plus graduate students, postdoctoral researchers, faculty members and working professionals can all log on and participate. “Initially, we’re hoping to get a hold of a few dozen folks in the climate community,” Prabhat says, “and eventually a few hundred.”

To jump-start the project, the team has held hackathons at labs, universities and conferences, inviting researchers to spend time labeling data and getting familiar with the ClimateContours interface. Kashinath and Prabhat unveiled ClimateContours at last year’s American Geophysical Union meeting in Washington. After presenting a talk about their work, the two set up a labeling workshop to solicit feedback from climate scientists. “The response was very positive,” Kashinath says. Some expressed interest in using ClimateContours in meteorological classes to improve students’ ability to detect events visually and to better understand them. Others wanted to upload their own data, a feature the team is working to include in future releases.

“Better analysis tools will tell us more about how and when extreme weather events form and how intense they will be,” Prabhat says.

Kashinath agrees. “The goal is a model that can predict weather events. The hope is that someday organizations like the National Weather Service can tap into this database for their operational forecasts.”

About the Author

Chris Palmer has written for more than two dozen publications, including Nature, The Scientist and Cancer Today and for the National Institutes of Health. He holds a doctorate in neuroscience from the University of Texas, Austin, and completed the Science Communication Program at the University of California, Santa Cruz.