A fusion fix

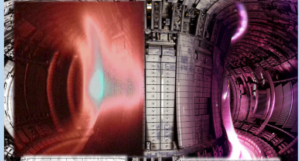

A plasma disruption in an experiment on the Joint European Torus (JET), left, and a disruption-free experiment on JET, right. A neural network can be trained to predict disruptions by assigning weights to the data flowing along the connections between its nodes. (Image courtesy of Eliot Feibush, Princeton Plasma Physics Laboratory.)

A Department of Energy Computational Science Graduate Fellowship (DOE CSGF) recipient is the lead developer for software that could be vital to the success of plentiful, clean fusion energy.

Julian Kates-Harbeck, a Harvard University doctoral candidate in physics, launched the project as part of his 2016 DOE CSGF practicum at the Princeton Plasma Physics Laboratory (PPPL), a DOE facility. The lab is part of an international effort to harness nuclear fusion, the same process that powers the sun, to generate electricity.

One of the leading fusion reactor designs is a tokamak, a donut-shaped chamber that uses powerful magnets to hold plasma – a mixture of ions and electrons – heated to temperatures hotter than the sun’s interior. Under these extreme conditions, hydrogen nuclei fuse, releasing tremendous energy.

But the hot plasma can escape the magnetic field, stopping the fusion reaction and damaging the reactor walls. Kates-Harbeck is the lead architect for software that uses artificial intelligence to monitor the reactor’s operation and predict these disruptions, triggering steps to avoid or mitigate them. A second DOE CSGF recipient, Princeton University’s Kyle Felker, also assisted with the project during his 2016 PPPL practicum, offering insights into plasma behavior and helping compare the algorithm with alternative tools.

The program, called Fusion Recurrent Neural Network (FRNN), applies deep learning, a leading-edge, powerful form of artificial intelligence. Neural networks excel at analyzing diverse sources of multidimensional, time-dependent data, says Kates-Harbeck, who studied artificial intelligence and machine learning as a Stanford University master’s degree student.

During his practicum with PPPL’s William Tang, Kates-Harbeck worked to find sensor data, then format, process and normalize them for input to the deep-learning algorithm. The code analyzes the information to identify factors leading to a disruption. Once trained on those data, it should be able to spot signs of an oncoming disruption and help head it off.
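To give a sense of what normalizing sensor data can involve, here is a minimal sketch. It is not FRNN's actual preprocessing, which the article does not describe. It assumes a simple zero-mean, unit-variance rescaling, and the signal names and readings are hypothetical.

```python
from statistics import mean, pstdev

def normalize_signal(samples):
    """Rescale one sensor's time series to zero mean and unit variance."""
    mu = mean(samples)
    sigma = pstdev(samples)
    if sigma == 0:
        # A constant signal carries no variation; map it to all zeros.
        return [0.0 for _ in samples]
    return [(x - mu) / sigma for x in samples]

# Hypothetical readings from two diagnostics with very different scales.
shot = {
    "plasma_current_MA": [1.8, 2.0, 2.2, 2.0],
    "density_1e19_m3": [3.0, 5.0, 7.0, 5.0],
}
normalized = {name: normalize_signal(values) for name, values in shot.items()}
```

After rescaling, signals measured in different units share a comparable range, so no single sensor dominates the network's input simply because its raw numbers are larger.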

But “training deep-learning algorithms on lots of data takes a lot of time,” Kates-Harbeck says. Running the initial model took up to 24 hours, even when the computer’s central processing unit had help from a graphics processing unit (GPU), a chip that accelerates repetitive calculations. That’s too long. “To find a model that works well, you have to tune the parameters” in an iterative process to find the combination that best predicts a disruption. “Different parameters give different performance, and you want to find the best model, so you have to train many, many models in parallel.”

To speed the calculations, Kates-Harbeck wrote a distributed training algorithm that uses multiple GPUs. In practice, the algorithm scales well, reducing computation time in proportion to the number of GPUs it uses.
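The article does not detail how the distributed algorithm works. One common approach, shown here purely as an illustration, is synchronous data-parallel training: each worker computes a gradient on its own shard of a batch, and the gradients are averaged before the model is updated. The toy model, data and function names below are assumptions, and the workers run one after another rather than on separate GPUs.

```python
def gradient(w, batch):
    """Gradient of mean squared error for the toy model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)

def data_parallel_step(w, batch, n_workers, lr=0.01):
    """One synchronous update; assumes len(batch) is divisible by n_workers."""
    shard_size = len(batch) // n_workers
    shards = [batch[i * shard_size:(i + 1) * shard_size] for i in range(n_workers)]
    # On real hardware each shard's gradient would be computed concurrently,
    # one per GPU; here they are computed in sequence.
    local_grads = [gradient(w, shard) for shard in shards]
    avg_grad = sum(local_grads) / n_workers
    return w - lr * avg_grad

# Toy data generated with a true weight of 3.
data = [(float(x), 3.0 * x) for x in range(1, 9)]
w = 0.0
for _ in range(200):
    w = data_parallel_step(w, data, n_workers=4)
```

With equal-sized shards, the averaged gradient matches the gradient of the whole batch, so the update is the same as on a single device while each worker handles only a fraction of the data.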

Using training data from the Joint European Torus fusion reactor, the researchers have shown the algorithm predicts disruptive events more accurately than previous methods while reducing the number of false alarms, a PPPL release says. It has been tested on some of the world’s biggest high-performance computing (HPC) systems, including Oak Ridge National Laboratory’s Titan Cray XK7, the most powerful machine in the United States, and scaled well on thousands of GPUs.

Kates-Harbeck says the next step is to use FRNN on real-time data on a working tokamak. That will require ensuring the code can interface with many different reactors, especially ITER, the international project to build a giant reactor in southern France. ITER is so large and expensive “you can’t just run it thousands of times and let it disrupt to build a training data set,” he adds. “You have to have a working disruption predictor before you even start the machine because only a handful of disruptions at high energy can destroy” it.

ITER wants its program to correctly predict disruptions 95 percent of the time while generating fewer than 3 percent false alarms – a demanding standard. “You need to be confident that you can train a model without original data,” Kates-Harbeck says, but with data from other reactors instead, “and expect the performance to translate onto a new machine, which has new parameters, new physics.”
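The two targets can be checked with a short calculation. The sketch below assumes the false-alarm rate is the fraction of disruption-free shots that were flagged, a definition the article does not spell out. The shot counts are invented for illustration and are not experimental results.

```python
def evaluate(predicted, actual):
    """Return (true positive rate, false alarm rate) over a set of shots."""
    on_disruptive = [p for p, a in zip(predicted, actual) if a]
    on_safe = [p for p, a in zip(predicted, actual) if not a]
    tpr = sum(on_disruptive) / len(on_disruptive)  # disruptions caught
    far = sum(on_safe) / len(on_safe)              # safe shots wrongly flagged
    return tpr, far

def meets_target(tpr, far, min_tpr=0.95, max_far=0.03):
    """Check the thresholds quoted in the text: 95 percent and under 3 percent."""
    return tpr >= min_tpr and far < max_far

# Invented example: 20 disruptive shots and 100 disruption-free shots.
actual = [True] * 20 + [False] * 100
predicted = [True] * 19 + [False] + [True] * 2 + [False] * 98
tpr, far = evaluate(predicted, actual)
ok = meets_target(tpr, far)
```

In this invented case the predictor catches 19 of 20 disruptions and raises 2 false alarms in 100 safe shots, which meets both thresholds.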

Kates-Harbeck is pursuing the FRNN project while also conducting doctoral research to model the dynamics of how ideas spread across social networks.

About the Author

The author is a former Krell Institute science writer.