Borrowing from the brain

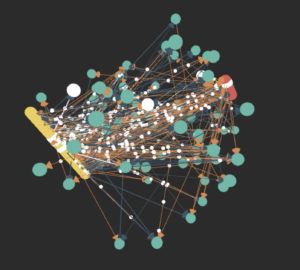

A spiking neural network depiction in which input neurons (line of yellow spheres, lower left) and output neurons (red, upper right) are linked by hidden neurons (teal) and synapses (arrows). The excitatory synapses (orange arrows) highlight pathways where information is more likely to travel, while data is less likely to travel along inhibitory synapses (blue arrows). White areas highlight spikes and firing neurons. This spiking neural network was trained to classify high energy physics data. (Visualization: Catherine Schuman/Oak Ridge National Laboratory and Margaret Drouhard/University of Washington.)

Her surroundings have inspired Catherine (Katie) Schuman’s career. Now a research scientist at the Department of Energy’s Oak Ridge National Laboratory, just 30 miles from where she grew up, Schuman works at the forefront of neuromorphic computing, building artificial intelligence algorithms for processors that mimic the brain’s form and function.

Schuman focuses on algorithms and software but also works closely with engineers and materials scientists designing and developing these processors “to influence what the underlying hardware is going to look like,” she says. She also considers a more open-ended question: “If I could have a neuromorphic computer that looked like what I wanted, what would the algorithm be?” Last year, Schuman received an Early Career Research Award from the DOE Office of Science to support this work.

Schuman’s computing interests started at home, where her father, a middle school math teacher who advised the computer club, had the latest technology. Both of her siblings work in the field, and during high school she interned with her brother’s company before heading to the University of Tennessee, Knoxville.

As a middle school student, she also was fascinated by the work of ORNL geneticist Liane Russell and briefly considered following in her footsteps. Instead, Schuman brought those interests to nature-inspired machine learning, a field in which researchers use biological principles to design new algorithms.

When she started graduate school at Tennessee in 2010, Schuman discussed her interests with professors steeped in emerging neuromorphic hardware – processors laid out with neurons and synapses, like the brain. With memory and computation combined, such designs are tailored for bio-inspired algorithms and can be thousands of times more energy efficient than standard hardware. That helped her get in on the ground floor of neuromorphic computing.

Schuman’s machine-learning work relies on genetic and evolutionary algorithms, which apply some of Darwin’s principles to optimize a neural network’s performance. The computer introduces random changes, or mutations, into a population of networks or recombines components from different networks. “Sometimes that results in a better-performing network,” Schuman says. “Sometimes that destroys the functionality.” Once the computer evaluates those changes, the best-performing networks survive as parents that produce the next generation.
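The mutate, recombine, evaluate and select loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Schuman’s software: the "network" is a single threshold neuron with two weights and a bias, the toy task (logical AND), the operator choices (Gaussian mutation, uniform crossover) and all function names are hypothetical.

```python
import random
from typing import List, Tuple

# Hypothetical genome encoding: [w0, w1, bias] for one threshold neuron.
Genome = List[float]

# Toy task chosen for illustration only: learn logical AND.
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def predict(genome: Genome, x: Tuple[int, int]) -> int:
    w0, w1, b = genome
    return 1 if w0 * x[0] + w1 * x[1] + b > 0 else 0


def fitness(genome: Genome) -> int:
    # Number of examples classified correctly (0 to 4).
    return sum(predict(genome, x) == y for x, y in AND_DATA)


def mutate(genome: Genome, rng: random.Random,
           rate: float = 0.3, scale: float = 0.5) -> Genome:
    # Randomly perturb some weights; this may help or break the network.
    return [g + rng.gauss(0.0, scale) if rng.random() < rate else g
            for g in genome]


def crossover(a: Genome, b: Genome, rng: random.Random) -> Genome:
    # Uniform crossover: each gene is taken from one parent at random.
    return [x if rng.random() < 0.5 else y for x, y in zip(a, b)]


def evolve(pop_size: int = 20, generations: int = 50,
           n_parents: int = 5, seed: int = 0):
    rng = random.Random(seed)
    population = [[rng.uniform(-1, 1) for _ in range(3)]
                  for _ in range(pop_size)]
    history = []  # best fitness seen in each generation
    for _ in range(generations):
        # Evaluate and rank; the top performers survive as parents.
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        parents = population[:n_parents]
        children = []
        while len(children) < pop_size - n_parents:
            a, b = rng.sample(parents, 2)
            children.append(mutate(crossover(a, b, rng), rng))
        # Elitism: parents carry over unchanged, so the best never gets worse.
        population = parents + children
    best = max(population, key=fitness)
    return best, history
```

Calling `best, history = evolve()` returns the fittest genome and a per-generation record of the best score. Because the parents are copied unchanged into each new generation, the recorded best fitness can stay flat or rise but never drop, even though individual mutations sometimes "destroy the functionality."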

Like other types of machine learning, neuromorphic computing relies on artificial neural networks, but of a specific type: spiking neural networks. Instead of continually passing along information, the neurons in these networks accumulate incoming signals and transmit a signal only once they reach a critical threshold, spiking like neurons in living brains. This feature conserves power because the processor expends energy largely when a neuron fires.
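The threshold behavior can be illustrated with a leaky integrate-and-fire neuron, a common textbook spiking model. This is a minimal sketch assuming discrete time steps, a multiplicative leak and a reset to zero after each spike; the function name `lif_neuron` and the default `threshold` and `leak` values are illustrative choices, not parameters of any ORNL processor.

```python
from typing import List, Tuple


def lif_neuron(inputs: List[float], threshold: float = 1.0,
               leak: float = 0.5) -> Tuple[List[int], List[float]]:
    """Simulate one leaky integrate-and-fire neuron in discrete time.

    Returns (spikes, potentials): spikes[t] is 1 if the neuron fired at
    step t; potentials[t] is the membrane potential after any reset.
    """
    v = 0.0
    spikes: List[int] = []
    potentials: List[float] = []
    for x in inputs:
        v = leak * v + x       # decay the stored charge, then add new input
        if v >= threshold:     # critical threshold reached: emit a spike
            spikes.append(1)
            v = 0.0            # reset after firing
        else:
            spikes.append(0)   # below threshold: retain charge, send nothing
        potentials.append(v)
    return spikes, potentials


spikes, potentials = lif_neuron([0.5, 0.75, 0.0, 0.25, 1.0])
print(spikes)      # [0, 1, 0, 0, 1]
print(potentials)  # [0.5, 0.0, 0.0, 0.25, 0.0]
```

Neither 0.5 nor 0.75 crosses the threshold alone, but the retained charge lets them combine into a spike at the second step. Only the steps marked 1 would send anything downstream, which is the sparsity that event-driven hardware can exploit to save energy.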

To develop algorithms for processors still in development, Schuman uses high-performance computing systems such as the Oak Ridge Leadership Computing Facility’s Summit to simulate neuromorphic chip architectures. In that simulated environment, she trains and improves spiking neural networks. Once this initial training and optimization process is done, Schuman and her colleagues deploy these algorithms onto real-world prototypes.

Schuman is already working on neuromorphic-processor applications, teaming up with ORNL’s National Transportation Research Center to test whether these small, low-power chips could optimize fuel injection in engines. Such a smart engine could learn from road conditions and driving patterns, adjusting how much gas is burned over time. “We see some interesting results on improving the fuel efficiency in certain cases,” she says. “Now it’s time to go back to the algorithms and make them learn in the loop as the engine is running.”

Projects like this help Schuman focus on the many practical uses of neuromorphic chips: as co-processors in supercomputers, in navigation systems for autonomous vehicles and robots, and even in watches and wearable or implantable medical devices. All these systems could benefit from low-power processors that analyze real-time data and provide feedback, she says. But she’s also interested in more fundamental questions, such as how neural network algorithms learn and the role synaptic plasticity plays in that learning.

She expects that the exchange among software developers, hardware engineers and materials scientists will yield diverse ideas and potentially novel ways of computing for years to come. For example, one ORNL materials science group has developed bubble-like synaptic devices built from lipids that mimic the fats, proteins and other compounds in a cell membrane. “It works like a chemical synapse in the brain. That has properties that we never even thought about a computer having before.” The chips’ adaptability is their key strength, she says, because researchers will be able to customize processors for specific applications.

Besides her research, Schuman also helps teach middle- and high-school students, particularly girls, about machine learning and how it affects their lives. She taught a class through Oak Ridge CS Girls, a program that until recently met in person on Saturdays. After the classes moved online during the coronavirus pandemic, they began to include students from across the United States. Schuman hopes to expand that project to include a class on neuromorphic computing. “I want to get people interacting with this new type of computing and change what people think is possible with computers from a young age.”

About the Author

Sarah Webb is science communications manager at the Krell Institute. She’s managing editor of DEIXIS: The DOE CSGF Annual and producer-host of the podcast Science in Parallel. She holds a Ph.D. in chemistry and a bachelor’s degree in German, and she completed a Fulbright fellowship doing organic chemistry research in Germany.