Cloud forebear

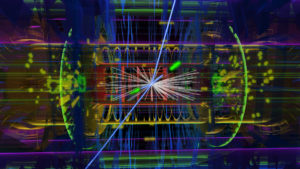

Muons (long blue tracks) and electrons (short blue tracks with matching green clusters) in a Higgs-like decay recorded by the ATLAS experiment. ATLAS is one of the detectors at the Large Hadron Collider at CERN in Geneva, Switzerland, and relies on the PanDA workload management system. (Image: CERN.)

Earth’s most powerful subatomic particle accelerator, the Large Hadron Collider (LHC), feeds a hyperintensive data workload-management system called PanDA. Thanks to the Department of Energy (DOE), both are now also linked to one of the world’s fastest supercomputers.

Organizers of that effort are now extending it to other top high-performance computing (HPC) systems, perhaps pointing the way toward exascale computing, a hundred times more powerful than today’s best machines.

The LHC is a 17-mile underground racetrack near Geneva, Switzerland, that since 2009 has been smashing together protons and ions at near light-speed and unprecedented high energies. The project aims to reveal fundamental particles that can’t exist under today’s calmer conditions on Earth.

In 2012, physicists working with the LHC famously documented the Higgs boson, the last major missing piece of what theorists call the Standard Model. After a two-year break starting in 2013, the LHC resumed operations at almost double its previous energy, up to 13 trillion electron volts. In this second run, known as Run 2, physicists are learning more about the Higgs and seeking new and perhaps unexpected particles.

The search requires sifting unimaginable numbers of fragments from proton smashups that occur 600 million times each second. That’s too many collisions to record with available technology, says Kaushik De, a University of Texas at Arlington high-energy physicist. “We can only record and save about a thousand per second with highly specialized electronics and computing equipment” that automatically decides what to reject, he explains.

Non-rejected candidates of interest become the raw data that then undergo further processing before finally becoming available for analysis. De is among as many as 3,000 physicists worldwide doing such analyses for ATLAS, one of the LHC’s two general-purpose detectors.

Since the LHC began, De’s ATLAS group has benefited immensely from the PanDA workload management system he also helped create. PanDA (for “production and distributed analysis”) now links about 250,000 computer cores shared by ATLAS physicists through an increasingly seamless worldwide network of approximately 150 data centers.

Its developers also “built the whole idea of clouds” for distributed computing and data storage into PanDA “long before Google and Amazon,” De notes. Alexei Klimentov, a Brookhaven National Laboratory physicist who co-created the system, says PanDA is “an absolutely unique system, and it is practically done automatically.”

De says that “the whole magic of workload management is that a physicist never knows where the processing is taking place. In an office next to me there could be a graduate student doing (LHC) analysis. That student doesn’t know that the data are actually sitting in Romania, Italy or Taiwan, and the computers doing the processing are in Switzerland or Germany.”
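The location transparency De describes can be pictured with a toy broker that places a job without the user ever naming a site. This is a hypothetical sketch, not PanDA’s actual brokerage logic: the `Site`, `Job` and `broker` names, the core counts and the selection rule (prefer a site that already holds the input data, otherwise take the site with the most free cores) are all illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Site:
    name: str
    free_cores: int
    datasets: set[str] = field(default_factory=set)  # data stored at this site


@dataclass
class Job:
    user: str
    dataset: str
    cores: int


def broker(job: Job, sites: list[Site]) -> Site:
    """Choose a site for the job; the user never picks one (hypothetical rule)."""
    candidates = [s for s in sites if s.free_cores >= job.cores]
    if not candidates:
        raise RuntimeError("no site has enough free cores")
    # Rank by (holds the input data, free cores): data locality first, then capacity.
    best = max(candidates, key=lambda s: (job.dataset in s.datasets, s.free_cores))
    best.free_cores -= job.cores  # reserve the cores at the chosen site
    return best


# Usage: the data sit in Taiwan, but Taiwan lacks free cores,
# so the job quietly runs elsewhere and the student never notices.
sites = [
    Site("Taiwan", 50, {"run2_sample"}),
    Site("Switzerland", 800),
    Site("Germany", 1200),
]
chosen = broker(Job("grad_student", "run2_sample", 100), sites)
print(chosen.name, chosen.free_cores)
```

Keeping the ranking in a single key function makes it easy to swap in a different (equally hypothetical) policy without changing how jobs are submitted.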

By 2016, PanDA’s data processing had reached 1.4 exabytes (1.4 billion billion bytes) a year, reports Klimentov, making it “the only system of its kind in science that is able to work on this scale.”

But the LHC’s expanding second-run output also poses a potential roadblock. “We realized the 250,000 cores we have would all be needed for the processing and analyzing of raw data,” De says. “So we suddenly faced this huge resource shortfall: How do we do our simulations?”

De refers to Monte Carlo simulations, which employ algorithms that rely on random sampling. “We do billions and billions of simulated collisions. We end up with billions and billions of both real raw and simulated data. Then we compare them. The simulations also require lots of computing.”
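The core idea of Monte Carlo, estimating a quantity by random sampling and checking it against a reference, fits in a few lines. This toy sketch is not an ATLAS simulation: the exponential energy distribution, the `simulate_pass_fraction` function and the mean and cut values are assumptions chosen only because the exact answer is known, which makes the comparison step easy to see.

```python
import math
import random


def simulate_pass_fraction(n_events: int, mean_energy: float, cut: float, seed: int = 42) -> float:
    """Monte Carlo estimate of the fraction of toy events with energy above `cut`.

    Energies are drawn from an exponential distribution, a toy assumption
    rather than a physics model of LHC collisions.
    """
    rng = random.Random(seed)  # seeded so the run is reproducible
    passed = sum(
        1 for _ in range(n_events) if rng.expovariate(1.0 / mean_energy) > cut
    )
    return passed / n_events


mean_energy, cut = 50.0, 100.0          # arbitrary toy units
estimate = simulate_pass_fraction(200_000, mean_energy, cut)
exact = math.exp(-cut / mean_energy)    # analytic answer for the exponential case
print(f"Monte Carlo: {estimate:.4f}  exact: {exact:.4f}")
```

The statistical error shrinks roughly as one over the square root of the number of samples, which is one reason realistic simulations need billions of events and, in turn, so much computing.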

So the PanDA team began considering the supercomputers at government facilities, which do simulations very well. The researchers approached colleagues at DOE’s Oak Ridge National Laboratory, home of Titan, an HPC system with a peak speed of 27.1 petaflops, or 27.1 quadrillion calculations per second. “Titan by itself [is] about the same size as all the ATLAS resources,” De says.

With about $3 million from the DOE Office of Science’s Advanced Scientific Computing Research program, De, Klimentov and colleagues from Brookhaven, Texas-Arlington and Oak Ridge launched what is called BigPanDA. They embarked on the arduous tasks of modifying many lines of PanDA code and connecting it to a supercomputer designed to be isolated.

The results have been encouraging. In the past year and a half, ATLAS modelers have been using “on the order of 10 million of Titan’s CPU (central processing unit) hours every month,” De says.

He, Klimentov and others have now undertaken a follow-up project called BigPanDA++ to similarly tie in other supercomputers. The list so far includes Edison at Lawrence Berkeley National Laboratory, Mira at Argonne National Laboratory, Anselm in the Czech Republic and SuperMUC near Munich, Germany.

They’ve also interested researchers outside high-energy physics in using linked HPC centers to advance fields such as molecular chemistry, advanced biology, materials science and bioinformatics.

“I think as we move toward exascale computing we will have to take that into account,” De says. “To go to 100 times larger than anything that exists today, we’re not just talking about making your chips even smaller. You really have to think about computers that work with others, even if they are in a different location, on a global scale.

“That’s what PanDA proved.”

About the Author

Monte Basgall is a freelance writer and former reporter for the Richmond Times-Dispatch, Miami Herald and Raleigh News & Observer. For 17 years he covered the basic sciences, engineering and environmental sciences at Duke University.
