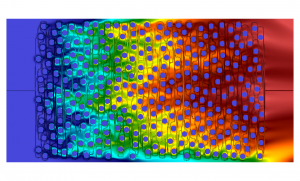

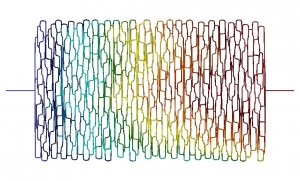

A Reeb graph (above) provides an overview of particle flow during the simulation by summarizing all possible paths of particle flow through a two-dimensional space packed with uniform spheres (right). The technique allows investigators to avoid downloading much of the simulation data. (Lawrence Berkeley National Laboratory.)

Here’s a challenge: Try understanding the action in a movie that has 999 of a thousand frames missing. It might be possible to glean some idea of what’s happening, but the missing frames are likely to contain information crucial to comprehending the plot.

That’s the situation facing high-performance computing (HPC) as it moves to the petascale (quadrillions of calculations per second) and beyond. Many simulations already generate much more data than can be effectively stored and analyzed.

To cope, researchers typically save data only at regular intervals of time steps and are likely to miss interesting behavior in between. It’s akin to stepping out for popcorn just when Darth Vader tells Luke Skywalker he’s his father. Nothing that happens afterward makes much sense without the missing information.

This disconnect is inspiring Lawrence Berkeley National Laboratory’s visualization group to create a new generation of data analytics and visualization tools.

As HPC reaches toward the exascale (a thousand times faster than petascale), a vastly increased number of essentially independent computing cores will make data movement a major bottleneck, says Gunther Weber, a research scientist in the Berkeley Lab visualization group. The current pattern of serially running a large simulation, dumping the data to disk and then doing post-processing analytics and visualization must change, he says; analytics will need to be built into simulations.

As a first step toward that goal, Weber and his research team are embedding data analytics into NYX, a simulation used to explore the behavior of matter in deep space. NYX, developed by Ann Almgren and her colleagues at the lab’s Center for Computational Sciences and Engineering, uses a technique called adaptive mesh refinement in a nested hierarchy of uniform grids that follows matter distribution in both space and time. The cosmology simulations, led by Peter Nugent, Berkeley Lab senior staff scientist, aim to pin down the balance of forces that lead to matter clustering that matches deep space observations. (See sidebar, “Going deep.”)

“These are large, large simulations,” Nugent says. “You need to have the analysis tools embedded in the simulation because you can’t dump out this data every single time step and look at things. Any time you move from memory to somewhere else, it’s painful.”

A typical simulation run on today’s high-performance computers, such as Hopper, the National Energy Research Scientific Computing Center’s 1.3-petaflops Cray XE6, analyzes the behavior of 69 billion particles in three dimensions and generates about 20 terabytes of data per time step, Nugent says. A reasonably large data storage allocation at the computing center is about 100 terabytes, so at most it’s possible to read out five time steps.

Weber uses topological analysis, a technique that will initially help reduce the amount of data written to disk and will eventually help scientists determine where and when interesting behavior is occurring in the simulation. The embedded topology analysis studies the data in real time so that seemingly separate and distinct behavior can be recognized as connected and analyzed as such.

With embedded topology analytics, Nugent and the research team hope to run about 100 time steps and then read out a summary that highlights data of interest. The analytics are expected to let the team compress 20 terabytes to a few megabytes.
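The storage arithmetic behind these figures can be checked with a short sketch. The 20-terabyte and 100-terabyte values come from Nugent's description; the 5-megabyte summary size is only a hypothetical stand-in for "a few megabytes," and the function names and the choice of decimal units are assumptions made for illustration.

```python
# Back-of-the-envelope storage arithmetic using the figures quoted in the article.
TB = 10**12  # decimal terabyte in bytes (assumption: the article does not specify)
MB = 10**6   # decimal megabyte in bytes (same assumption)

BYTES_PER_STEP = 20 * TB   # raw output per time step (from the article)
ALLOCATION = 100 * TB      # storage allocation (from the article)
SUMMARY_BYTES = 5 * MB     # hypothetical value standing in for "a few megabytes"


def steps_that_fit(allocation_bytes, per_step_bytes):
    """Number of whole time steps whose raw output fits in the allocation."""
    return allocation_bytes // per_step_bytes


def reduction_factor(raw_bytes, reduced_bytes):
    """How many times smaller the summary is than the raw data."""
    return raw_bytes / reduced_bytes


print(steps_that_fit(ALLOCATION, BYTES_PER_STEP))                 # 5
print(f"{reduction_factor(BYTES_PER_STEP, SUMMARY_BYTES):.0e}")   # 4e+06
```

Under these assumptions, a summary is millions of times smaller than a single raw time step, which is why reading out a digest of about 100 steps becomes feasible where raw output allows only five.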

Currently, the simulation software can be instructed to concentrate resolution on interesting areas of space where dense clusters of matter are forming, based on a rather simple density measurement. To perform a more sophisticated analysis at a higher level of physics, Weber and Nugent are designing the embedded analytics to run without intervention from the research team. Nugent says the embedded analytics will not only summarize terabytes of data but also guide the simulation.

The first step – fully embedding the analytics into NYX – is nearly finished, Weber says. “By being forced to integrate the data analysis into the simulations, these results become available during the simulation, and there are very interesting possibilities to use the results to affect the calculations. We are more than 90 percent there.”

Besides selecting time points for analysis, the Berkeley Lab team – which includes David Trebotich, a computational scientist in the applied numerical algorithms group; Daniela Ushizima, a research scientist studying carbon sequestration; and post-doctoral scientist Dmitriy Morozov – is studying whether analytics can annotate data sets. The goal is to set up simulations that calculate full physics on only a subset of the data, allowing the analytics to substitute a representation for some of the forces.

For example, in carbon sequestration simulations, researchers would like to simulate movement of gas through rock at a small-pore scale. But a more realistic picture requires simulating pores and voids of various sizes. The analytics allow researchers to extract and visualize representative summary data in the form of a Reeb graph, a three-dimensional, symbolic mathematical representation that doesn’t require a full download of the entire simulation.
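To make the idea concrete, here is a minimal, discrete Reeb-graph-style sketch on a toy two-dimensional pore grid. It is not the Berkeley Lab implementation: the grid encoding (`.` for open pore space, `#` for solid), the function names, and the choice to sweep along the horizontal axis are all assumptions made for illustration. Each column is cut into connected runs of open cells, and runs in neighboring columns are linked when they touch, so the resulting graph records where a flow path splits or merges.

```python
def column_runs(grid, x):
    """Maximal vertical runs of open cells in column x, as (y_start, y_end) inclusive."""
    runs, start = [], None
    for y, row in enumerate(grid):
        if row[x] == ".":
            if start is None:
                start = y
        elif start is not None:
            runs.append((start, y - 1))
            start = None
    if start is not None:
        runs.append((start, len(grid) - 1))
    return runs


def reeb_sketch(grid):
    """Sweep left to right; nodes are per-column runs, edges join touching runs."""
    nodes, edges, prev = [], [], []
    for x in range(len(grid[0])):
        cur = []
        for y0, y1 in column_runs(grid, x):
            idx = len(nodes)
            nodes.append((x, y0, y1))
            cur.append((idx, (y0, y1)))
            for pidx, (p0, p1) in prev:
                if p0 <= y1 and y0 <= p1:  # row intervals overlap, so the runs touch
                    edges.append((pidx, idx))
        prev = cur
    return nodes, edges


def classify(nodes, edges):
    """Indices of nodes where paths split (several right links) or merge (several left links)."""
    left, right = [0] * len(nodes), [0] * len(nodes)
    for a, b in edges:
        right[a] += 1
        left[b] += 1
    return {
        "splits": [i for i in range(len(nodes)) if right[i] > 1],
        "merges": [i for i in range(len(nodes)) if left[i] > 1],
    }


# Toy pore space: one solid obstacle that the flow must pass above or below.
grid = [
    "......",
    ".##...",
    "......",
]
nodes, edges = reeb_sketch(grid)
print(len(nodes), len(edges))    # 8 8
print(classify(nodes, edges))    # {'splits': [0], 'merges': [5]}
```

In this toy case the 18-cell grid reduces to a graph with one split and one merge, meaning two alternative paths around the obstacle. A production analysis would also contract chains of nodes that neither split nor merge, which this sketch leaves out for brevity.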

“We’ve been talking about using this Reeb graph to extract information at the small scale and then using that result in another simulation that looks at larger-scale behavior,” Weber says. The detailed simulation would then provide the summary information of the full physics model at the pore level. In turn, that summary information would serve as a stand-in for the next run, making it possible to do simulations on a larger scale.

Both the carbon sequestration code and the NYX code are designed to be portable to the new processing, memory and interconnect technologies exascale computing requires. Indeed, NYX is an extension of the BoxLib framework that is being explicitly designed to port to exascale computing.

Says Nugent, “We are hopeful that in five to eight years’ time, we will be running on exascale machines.”